Re: memory leak in trigger handling (since PG12)

| От | Andres Freund |

|---|---|

| Тема | Re: memory leak in trigger handling (since PG12) |

| Дата | |

| Msg-id | 20230524181408.wlgzhoe7he7h3tjk@awork3.anarazel.de обсуждение исходный текст |

| Ответ на | Re: memory leak in trigger handling (since PG12) (Tomas Vondra <tomas.vondra@enterprisedb.com>) |

| Ответы |

Re: memory leak in trigger handling (since PG12)

(Tomas Vondra <tomas.vondra@enterprisedb.com>)

|

| Список | pgsql-hackers |

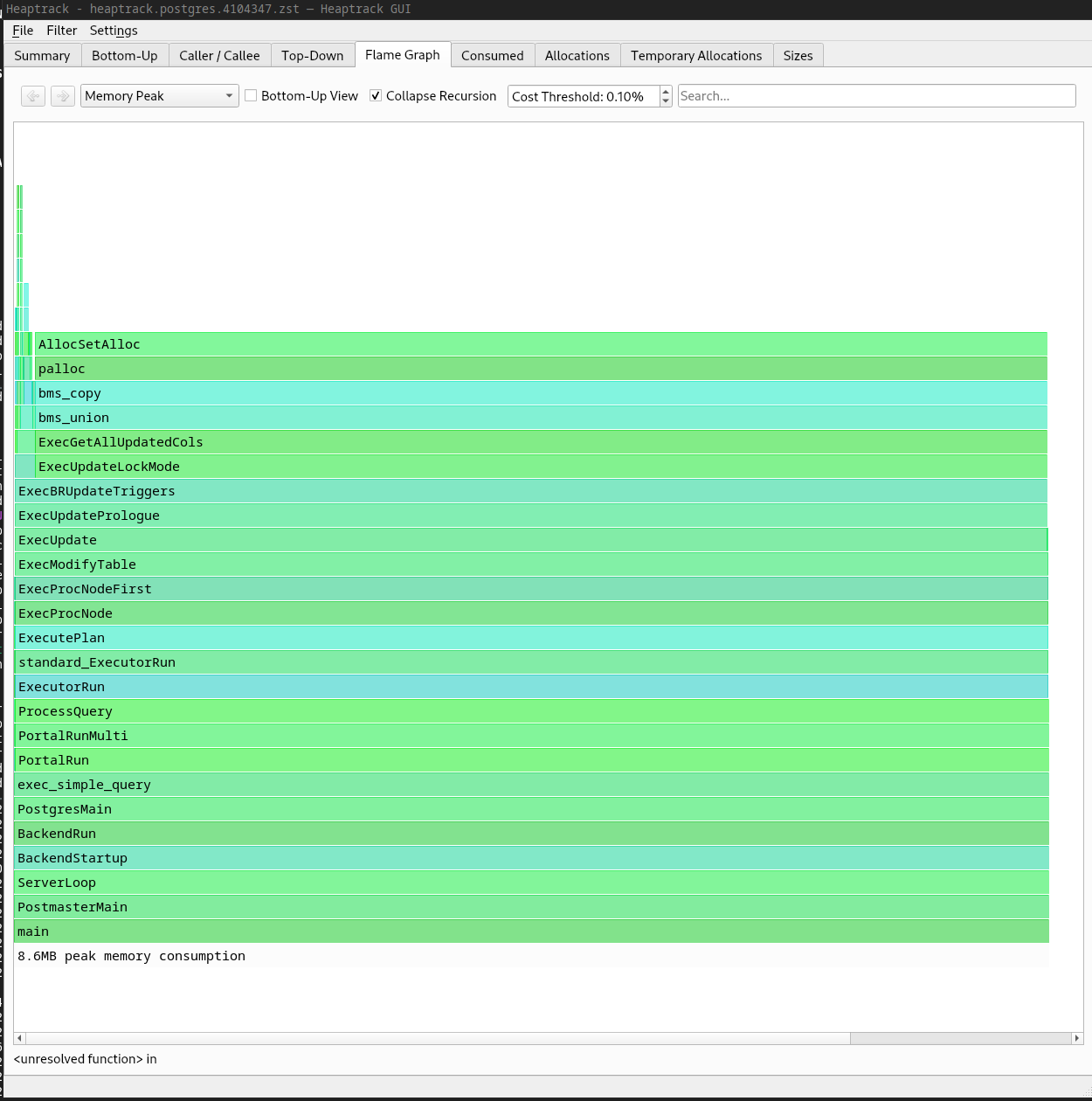

Hi, On 2023-05-23 23:26:42 +0200, Tomas Vondra wrote: > On 5/23/23 19:14, Andres Freund wrote: > > Hi, > > > > On 2023-05-23 18:23:00 +0200, Tomas Vondra wrote: > >> This means that for an UPDATE with triggers, we may end up calling this > >> for each row, possibly multiple bitmaps. And those bitmaps are allocated > >> in ExecutorState, so won't be freed until the end of the query :-( > > > > Ugh. > > > > > > I've wondered about some form of instrumentation to detect such issues > > before. It's obviously a problem that we can have fairly large leaks, like the > > one you just discovered, without detecting it for a couple years. It's kinda > > made worse by the memory context infrastructure, because it hides such issues. > > > > Could it help to have a mode where the executor shutdown hook checks how much > > memory is allocated in ExecutorState and warns if its too much? There's IIRC a > > few places that allocate large things directly in it, but most of those > > probably should be dedicated contexts anyway. Something similar could be > > useful for some other long-lived contexts. > > > > Not sure such simple instrumentation would help much, unfortunately :-( > > We only discovered this because the user reported OOM crashes, which > means the executor didn't get to the shutdown hook at all. Yeah, maybe > if we had such instrumentation it'd get triggered for milder cases, but > I'd bet the amount of noise is going to be significant. > > For example, there's a nearby thread about hashjoin allocating buffiles > etc. in ExecutorState - we ended up moving that to a separate context, > but surely there are more such cases (not just for ExecutorState). Yes, that's why I said that we would have to more of those into dedicated contexts - which is a good idea independent of this issue. > The really hard thing was determining what causes the memory leak - the > simple instrumentation doesn't help with that at all. It tells you there > might be a leak, but you don't know where did the allocations came from. > > What I ended up doing is a simple gdb script that sets breakpoints on > all palloc/pfree variants, and prints info (including the backtrace) for > each call on ExecutorState. And then a script that aggregate those to > identify which backtraces allocated most chunks that were not freed. FWIW, for things like this I found "heaptrack" to be extremely helpful. E.g. for a reproducer of the problem here, it gave me the attach "flame graph" of the peak memory usage - after attaching to a running backend and running an UPDATE triggering the leak.. Because the view I screenshotted shows the stacks contributing to peak memory usage, it works nicely to find "temporary leaks", even if memory is actually freed after all etc. > > Hm. Somehow this doesn't seem quite right. Shouldn't we try to use a shorter > > lived memory context instead? Otherwise we'll just end up with the same > > problem in a few years. > > > > I agree using a shorter lived memory context would be more elegant, and > more in line with how we do things. But it's not clear to me why we'd > end up with the same problem in a few years with what the patch does. Because it sets up the pattern of manual memory management and continues to run the relevant code within a query-lifetime context. Greetings, Andres Freund

Вложения

В списке pgsql-hackers по дате отправления: