Обсуждение: Bug on update timing of walrcv->flushedUpto variable

Hi, all

Recently, I found a bug on update timing of walrcv->flushedUpto variable, consider the following scenario, there is one Primary node, one Standby node which streaming from Primary:

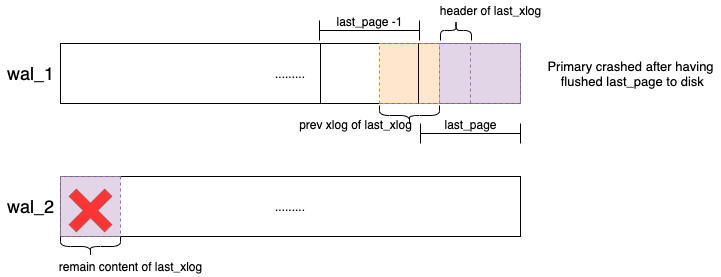

- There are a large number of SQL running in the Primary, and the length of the xlog record generated by these SQL maybe greater than the left space of current page so that it needs to be written cross pages. As shown below, the length of the last_xlog of wal_1 is greater than the left space of last_page, so it has to be written in wal_2. If Primary crashed after flused the last_page of wal_1 to disk, the remian content of last_xlog hasn't been flushed in time, then the last_xlog in wal_1 will be incomplete. And Standby also received the wal_1 by wal-streaming in this case.

- Primary restarts after crash, during the crash recovery, Primary will find that the last_xlog of wal_1 is invalid, and it will cover the space of last_xlog by inserting new xlog record. However, Standby won't do this, and there will be xlog inconsistency between Primary and standby at this time.

- When Standby restarts and replays the last_xlog, it will first get the content of XLogRecord structure (the header of last_xlog is completed flushed), and find that it has to reassemble the last_xlog, the next page of last_xlog is within wal_2, which not exists in pg_wal of Standby. So it request xlog streaming from Primary to get the wal_2, and update the walrcv->flushedUpto when it has received new xlog and flushed them to disk, now the value of walrcv->flushedUpto is some LSN within wal_2.

- Standby get wal_2 from Primary, but the content of the first page of wal_2 is not the remain content of last_xlog, which has already been covered by new xlog in Primary. Standby checked and found that the record is invalid, it will read the last_xlog again, and call the WaitForWALToBecomeAvailable function, in this function it will shutdown the wal-streaming and read the record from pg_wal.

- Again, the record read from pg_wal is also invalid, so Standby will request wal-streaming again, and it is worth noting that the value of walrcv->flushedUpto has already been set to wal_2 before, which is greater than the LSN Standby needs, so the variable havedata in WaitForWALToBecomeAvailable is always true, and Standby considers that it received the xlog, it will read the content from wal_2.

- Next is the endless loop: Standby found the xlog is invalid -> read the last_xlog again -> shutdown wal-streaming and read xlog from pg_wal -> found the xlog is invalid -> request wal-streaming, expect to get the correct xlog, but it will return from WaitForWALToBecomeAvailable immediately because the walrcv->flushedUpto is always greater than the LSN it needs ->read and found the xlog is invalid -> read the last_xlog again ->......

- In this case, Standby will never get the correct xlog record until it restarts

- The confusing point is: why only updates the walrcv->flushedUpto at the first startup of walreceiver on a specific timeline, not each time when request xlog streaming? In above case, it is also reasonable to update walrcv->flushedUpto to wal_1 when Standby re-receive wal_1. So I changed to update the walrcv->flushedUpto each time when request xlog streaming, which is the patch I want to share with you, based on postgresql-13.2, what do you think of this change?

By the way, I also want to know why call pgstat_reset_all function during recovery process?

Thanks & Best Regard

Вложения

Hi. (Added Nathan, Andrey and Heikki in Cc:) At Fri, 26 Mar 2021 23:44:21 +0800, "蔡梦娟(玊于)" <mengjuan.cmj@alibaba-inc.com> wrote in > Hi, all > > Recently, I found a bug on update timing of walrcv->flushedUpto variable, consider the following scenario, there is onePrimary node, one Standby node which streaming from Primary: > There are a large number of SQL running in the Primary, and the length of the xlog record generated by these SQL maybegreater than the left space of current page so that it needs to be written cross pages. As shown below, the length ofthe last_xlog of wal_1 is greater than the left space of last_page, so it has to be written in wal_2. If Primary crashedafter flused the last_page of wal_1 to disk, the remian content of last_xlog hasn't been flushed in time, then thelast_xlog in wal_1 will be incomplete. And Standby also received the wal_1 by wal-streaming in this case. It seems like the same with the issue discussed in [1]. There are two symptom of the issue, one is that archive ends with a segment that ends with a immature WAL record, which causes inconsistency between archive and pg_wal directory. Another is , as you saw, walreceiver receives an immature record at the end of a segment, which prevents recovery from proceeding. In the thread, trying to solve that by preventing such an immature records at a segment boundary from being archived and inhibiting being sent to standby. > [日志1.png] It doesn't seem attached.. > The confusing point is: why only updates the walrcv->flushedUpto at the first startup of walreceiver on a specific timeline,not each time when request xlog streaming? In above case, it is also reasonable to update walrcv->flushedUpto towal_1 when Standby re-receive wal_1. So I changed to update the walrcv->flushedUpto each time when request xlog streaming,which is the patch I want to share with you, based on postgresql-13.2, what do you think of this change? > > By the way, I also want to know why call pgstat_reset_all function during recovery process? We shouldn't rewind flushedUpto to backward. The variable notifies how far recovery (or startup process) can read WAL content safely. Once startup process reads the beginning of a record, XLogReadRecord tries to continue fetching *only the rest* of the record, which is inconsistent from the first part in this scenario. So at least only this fix doesn't work fine. And we also need to fix the archive inconsistency, maybe as a part of a fix for this issue. We are trying to fix this by refraining from archiving (or streaming) until a record crossing a segment boundary is completely flushed. regards. [1] https://www.postgresql.org/message-id/CBDDFA01-6E40-46BB-9F98-9340F4379505%40amazon.com -- Kyotaro Horiguchi NTT Open Source Software Center

Hi.

I still feel confused about some point, hope to get your answer:

1) You said that "We shouldn't rewind flushedUpto to backward. The variable notifies how far recovery (or startup process) can read WAL content safely. "

This fix only rewinds flushedUpto when request wal streaming, and rewind flushedUpto means it is re-receiving one wal segment file, which indicates that there maybe some wrong data in previous one. flushedUpto nitifies how far startup process can read WAL content safely because WAL before that has been flushed to disk. However, this doesn't mean it is correct to read the WAL because the content maybe invalid. And if we rewind flushedUpto, WaitForWALToBecomeAvailable function returns true only when the WAL re-received is greater than what XLogReadRecord needs, at this time it is correct and also safe to read the content.

By the way, what do you think of updating flushedUpto to the LogstreamResult.Flush when start streaming not when request streaming, the value of LogstreamResult.Flush is set to replayPtr when start streaming.

2) You said that "Once startup process reads the beginning of a record, XLogReadRecord tries to continue fetching *only the rest* of the record, which is inconsistent from the first part in this scenario."

If XLogReadRecord found the record is invalid, it will read the whole record again, inlcude the beginning of the record, not only the rest of the record. Am I missing something?

3)I wonder how are you going to acheive preventing an immature records at a segment boundary from being archived and inhibiting being sent to standby

Thanks & Best Regard

------------------------------------------------------------------发件人:Kyotaro Horiguchi <horikyota.ntt@gmail.com>发送时间:2021年3月29日(星期一) 09:54收件人:蔡梦娟(玊于) <mengjuan.cmj@alibaba-inc.com>抄 送:bossartn <bossartn@amazon.com>; x4mmm <x4mmm@yandex-team.ru>; hlinnaka <hlinnaka@iki.fi>; pgsql-hackers <pgsql-hackers@lists.postgresql.org>主 题:Re: Bug on update timing of walrcv->flushedUpto variableHi.

(Added Nathan, Andrey and Heikki in Cc:)

At Fri, 26 Mar 2021 23:44:21 +0800, "蔡梦娟(玊于)" <mengjuan.cmj@alibaba-inc.com> wrote in

> Hi, all

>

> Recently, I found a bug on update timing of walrcv->flushedUpto variable, consider the following scenario, there is one Primary node, one Standby node which streaming from Primary:

> There are a large number of SQL running in the Primary, and the length of the xlog record generated by these SQL maybe greater than the left space of current page so that it needs to be written cross pages. As shown below, the length of the last_xlog of wal_1 is greater than the left space of last_page, so it has to be written in wal_2. If Primary crashed after flused the last_page of wal_1 to disk, the remian content of last_xlog hasn't been flushed in time, then the last_xlog in wal_1 will be incomplete. And Standby also received the wal_1 by wal-streaming in this case.

It seems like the same with the issue discussed in [1].

There are two symptom of the issue, one is that archive ends with a

segment that ends with a immature WAL record, which causes

inconsistency between archive and pg_wal directory. Another is , as

you saw, walreceiver receives an immature record at the end of a

segment, which prevents recovery from proceeding.

In the thread, trying to solve that by preventing such an immature

records at a segment boundary from being archived and inhibiting being

sent to standby.

> [日志1.png]

It doesn't seem attached..

> The confusing point is: why only updates the walrcv->flushedUpto at the first startup of walreceiver on a specific timeline, not each time when request xlog streaming? In above case, it is also reasonable to update walrcv->flushedUpto to wal_1 when Standby re-receive wal_1. So I changed to update the walrcv->flushedUpto each time when request xlog streaming, which is the patch I want to share with you, based on postgresql-13.2, what do you think of this change?

>

> By the way, I also want to know why call pgstat_reset_all function during recovery process?

We shouldn't rewind flushedUpto to backward. The variable notifies how

far recovery (or startup process) can read WAL content safely. Once

startup process reads the beginning of a record, XLogReadRecord tries

to continue fetching *only the rest* of the record, which is

inconsistent from the first part in this scenario. So at least only

this fix doesn't work fine. And we also need to fix the archive

inconsistency, maybe as a part of a fix for this issue.

We are trying to fix this by refraining from archiving (or streaming)

until a record crossing a segment boundary is completely flushed.

regards.

[1] https://www.postgresql.org/message-id/CBDDFA01-6E40-46BB-9F98-9340F4379505%40amazon.com

--

Kyotaro Horiguchi

NTT Open Source Software Center