Обсуждение: Compressed binary field

Hi, My application have few binary fields that accept files. Most of them are XML files archived for reference only. I would like to know if there is any plan to implement compressed fields (just a "flag" in the field definition, like "not null") at database side (these fields are and will never be indexed neither used for search). Besides I know there are other options for file storage (like filesystem, etc), but database is so convenient and I really don't want to push application architecture now (it will replicate automatically, is available at an device I wish without sharing folders, etc). Thanks, Edson.

Edson Richter <edsonrichter@hotmail.com> writes: > I would like to know if there is any plan to implement compressed fields > (just a "flag" in the field definition, like "not null") at database > side (these fields are and will never be indexed neither used for search). Any field value over a couple kilobytes is compressed automatically; this has been true for more than a dozen years. You can turn that off if you want, but it's done by default. http://www.postgresql.org/docs/9.1/static/storage-toast.html regards, tom lane

Em 10/09/2012 15:35, Tom Lane escreveu: > Edson Richter <edsonrichter@hotmail.com> writes: >> I would like to know if there is any plan to implement compressed fields >> (just a "flag" in the field definition, like "not null") at database >> side (these fields are and will never be indexed neither used for search). > Any field value over a couple kilobytes is compressed automatically; > this has been true for more than a dozen years. You can turn that > off if you want, but it's done by default. > > http://www.postgresql.org/docs/9.1/static/storage-toast.html > > regards, tom lane > Hi, Tom! I've read this section of manual, but I was wondering (problably I did not fully understand the manual, maybe too technical or just my poor english suffering here) if there is a way to force all these filed to be compressed no matter size. I actually have more than 250,000 files in database with 7Gb on size (about this amount every 6 months). Thanks, Edson

Em 10/09/2012 16:09, Edson Richter escreveu: > Em 10/09/2012 15:35, Tom Lane escreveu: >> Edson Richter <edsonrichter@hotmail.com> writes: >>> I would like to know if there is any plan to implement compressed >>> fields >>> (just a "flag" in the field definition, like "not null") at database >>> side (these fields are and will never be indexed neither used for >>> search). >> Any field value over a couple kilobytes is compressed automatically; >> this has been true for more than a dozen years. You can turn that >> off if you want, but it's done by default. >> >> http://www.postgresql.org/docs/9.1/static/storage-toast.html >> >> regards, tom lane >> > Hi, Tom! > > I've read this section of manual, but I was wondering (problably I did > not fully understand the manual, maybe too technical or just my poor > english suffering here) if there is a way to force all these filed to > be compressed no matter size. I actually have more than 250,000 files > in database with 7Gb on size (about this amount every 6 months). > > Thanks, > > Edson > > Also, this automatic compression applies to bytea fields? Edson

Edson Richter <edsonrichter@hotmail.com> wrote: > this automatic compression applies to bytea fields? Yes, but keep in mind that anything which is already compressed or encrypted will probably not compress much if at all. Many of the binary objects you might want to store in the database probably already use compression internally. -Kevin

Em 10/09/2012 19:06, Kevin Grittner escreveu: > Edson Richter <edsonrichter@hotmail.com> wrote: > >> this automatic compression applies to bytea fields? > > Yes, but keep in mind that anything which is already compressed or > encrypted will probably not compress much if at all. Many of the > binary objects you might want to store in the database probably > already use compression internally. > > -Kevin My files are mostly XML, TXT and PDF files only. I already have separate tables for storing files, and avoid to query them together with other stuff. So, should I use alter table MYTABLE set storage EXTENDED ? Thanks, Edson

Edson Richter wrote: > So, should I use > > alter table MYTABLE set storage EXTENDED Don't bother; that is the default. This should already be happening automatically. Is there some problem you're seeing that you want to fix? If so, you should probably describe that. -Kevin

Em 11/09/2012 09:40, Kevin Grittner escreveu: > Edson Richter wrote: > >> So, should I use >> >> alter table MYTABLE set storage EXTENDED > > Don't bother; that is the default. > > This should already be happening automatically. Is there some > problem you're seeing that you want to fix? If so, you should > probably describe that. > > -Kevin No, there is no problem. Just trying to reduce database size forcing these fields to compress. Actual database size = 8Gb Backup size = 1.6Gb (5x smaller) Seems to me (IMHO) that there is room for improvement in database storage (we don't have many indexes, and biggest tables are just the ones with bytea fields). That's why I've asked for experts counseling. Regards, Edson.

Edson Richter <edsonrichter@hotmail.com> wrote: > there is no problem. Just trying to reduce database size > Actual database size = 8Gb > Backup size = 1.6Gb (5x smaller) > > Seems to me (IMHO) that there is room for improvement in database > storage (we don't have many indexes, and biggest tables are just > the ones with bytea fields). That's why I've asked for experts > counseling. What version of PostgreSQL is this? How are you measuring the size? Where is the space going? (Heap files? TOAST files? Index files? WAL files? Free space maps? Visibility maps? Server logs? Temporary files?) You aren't creating a separate table with one row for each binary object, are you? I only ask this because in an earlier post you mentioned having a quarter million files in the database, and in a production database which has been running for years with over 400 user tables and lots of indexes I only have about 4000 files in the whole database cluster. A separate table for each object would be disastrous for both performance and space usage. -Kevin

Em 11/09/2012 14:00, Kevin Grittner escreveu: > Edson Richter <edsonrichter@hotmail.com> wrote: > >> there is no problem. Just trying to reduce database size > >> Actual database size = 8Gb >> Backup size = 1.6Gb (5x smaller) >> >> Seems to me (IMHO) that there is room for improvement in database >> storage (we don't have many indexes, and biggest tables are just >> the ones with bytea fields). That's why I've asked for experts >> counseling. > > What version of PostgreSQL is this? 9.1.5 on Linux x64 (CentOS 5) > > How are you measuring the size? For storage, du -h --max-depth 1 on data directory gives me the amount of data. > Where is the space going? (Heap files? TOAST files? Index files? > WAL files? Free space maps? Visibility maps? Server logs? > Temporary files?) Biggest objects are just the tables with files. > You aren't creating a separate table with one row for each binary > object, are you? I only ask this because in an earlier post you > mentioned having a quarter million files in the database, and in a > production database which has been running for years with over 400 > user tables and lots of indexes I only have about 4000 files in the > whole database cluster. A separate table for each object would be > disastrous for both performance and space usage. I've 2 tables that held all these objects. Structure is create table MYTABLE (id bigint not null primary key, mimetype varchar(100) null, bytea datafile null) Regards, Edson. > > -Kevin > >

Edson Richter <edsonrichter@hotmail.com> wrote:

> For storage, du -h --max-depth 1 on data directory gives me the

> amount of data.

> Biggest objects are just the tables with files.

> I've 2 tables that held all these objects. Structure is

>

> create table MYTABLE (id bigint not null primary key, mimetype

> varchar(100) null, bytea datafile null)

Could you show the results of this query?:

SELECT relkind, oid, relfilenode, reltoastrelid,

relpages, reltuples

FROM pg_class

ORDER BY relpages DESC

LIMIT 10;

Also, just to be sure that all calculations are based on your actual

build, can you show the results of?:

SHOW block_size;

Have you checked the level of bloat yet? (Perhaps autovacuum needs

to be made more aggressive.)

-Kevin

Em 11/09/2012 14:34, Kevin Grittner escreveu:

Edson Richter <edsonrichter@hotmail.com> wrote:For storage, du -h --max-depth 1 on data directory gives me the amount of data.Biggest objects are just the tables with files.I've 2 tables that held all these objects. Structure is create table MYTABLE (id bigint not null primary key, mimetype varchar(100) null, bytea datafile null)Could you show the results of this query?: SELECT relkind, oid, relfilenode, reltoastrelid, relpages, reltuples FROM pg_class ORDER BY relpages DESC LIMIT 10;

| relkind | oid | relfilenode | reltoastrelid | relpages | reltuples |

|---|---|---|---|---|---|

| r | 312470 | 1043546 | 312492 | 29321 | 639571 |

| r | 312585 | 1043643 | 0 | 22732 | 1.80617e+06 |

| r | 312522 | 1043578 | 312527 | 19769 | 724210 |

| r | 312749 | 1043773 | 312753 | 14307 | 928538 |

| r | 312758 | 1043763 | 0 | 10488 | 917134 |

| r | 312498 | 1043525 | 0 | 7689 | 640572 |

| r | 312802 | 1043804 | 312810 | 7670 | 172789 |

| r | 312964 | 1044076 | 0 | 7586 | 385833 |

| i | 1041923 | 1043648 | 0 | 6958 | 1.80617e+06 |

| r | 312815 | 1043825 | 312819 | 6684 | 715081 |

8192Also, just to be sure that all calculations are based on your actual build, can you show the results of?: SHOW block_size;

Besides autocacuum, I usually run Vacuum Full on weekly basis. My calculations came after Vacuum Full.Have you checked the level of bloat yet? (Perhaps autovacuum needs to be made more aggressive.)

Edson.

-Kevin

Edson Richter <edsonrichter@hotmail.com> wrote: > Em 11/09/2012 14:34, Kevin Grittner escreveu: >> Edson Richter <edsonrichter@hotmail.com> wrote: >> >>> For storage, du -h --max-depth 1 on data directory gives me the >>> amount of data. >> >>> Biggest objects are just the tables with files. >> >>> I've 2 tables that held all these objects. Structure is >>> >>> create table MYTABLE (id bigint not null primary key, mimetype >>> varchar(100) null, bytea datafile null) >> >> Could you show the results of this query?: >> >> SELECT relkind, oid, relfilenode, reltoastrelid, >> relpages, reltuples >> FROM pg_class >> ORDER BY relpages DESC >> LIMIT 10; > [biggest relation was a table heap with 29321 pages] >> Also, just to be sure that all calculations are based on your >> actual build, can you show the results of?: >> >> SHOW block_size; > 8192 So your biggest table is actually 229 MB. Something is not adding up. I can't see any way to reconcile your previous statements with this number. There also hasn't been any real explanation for the statement that you have 250000 files. There must be something which matters here which hasn't yet been mentioned. Any ideas? -Kevin

Em 11/09/2012 14:59, Kevin Grittner escreveu: > Edson Richter <edsonrichter@hotmail.com> wrote: >> Em 11/09/2012 14:34, Kevin Grittner escreveu: >>> Edson Richter <edsonrichter@hotmail.com> wrote: >>> >>>> For storage, du -h --max-depth 1 on data directory gives me the >>>> amount of data. >>> >>>> Biggest objects are just the tables with files. >>> >>>> I've 2 tables that held all these objects. Structure is >>>> >>>> create table MYTABLE (id bigint not null primary key, mimetype >>>> varchar(100) null, bytea datafile null) >>> >>> Could you show the results of this query?: >>> >>> SELECT relkind, oid, relfilenode, reltoastrelid, >>> relpages, reltuples >>> FROM pg_class >>> ORDER BY relpages DESC >>> LIMIT 10; > >> [biggest relation was a table heap with 29321 pages] > >>> Also, just to be sure that all calculations are based on your >>> actual build, can you show the results of?: >>> >>> SHOW block_size; >> 8192 > > So your biggest table is actually 229 MB. Something is not adding > up. I can't see any way to reconcile your previous statements with > this number. There also hasn't been any real explanation for the > statement that you have 250000 files. There must be something which > matters here which hasn't yet been mentioned. Any ideas? > > -Kevin I don't know why, look result of the following query (arquivo is the bytea field): select count(*) from notafiscalarq where arquivo is not null; count -------- 715084 Strange, huh? Edson. > >

Em 12/09/2012 00:37, Edson Richter escreveu:

Em 11/09/2012 14:59, Kevin Grittner escreveu:Edson Richter <edsonrichter@hotmail.com> wrote:I don't know why, look result of the following query (arquivo is the bytea field):Em 11/09/2012 14:34, Kevin Grittner escreveu:Edson Richter <edsonrichter@hotmail.com> wrote:

For storage, du -h --max-depth 1 on data directory gives me the

amount of data.Biggest objects are just the tables with files.I've 2 tables that held all these objects. Structure isCould you show the results of this query?:

create table MYTABLE (id bigint not null primary key, mimetype

varchar(100) null, bytea datafile null)

SELECT relkind, oid, relfilenode, reltoastrelid,

relpages, reltuples

FROM pg_class

ORDER BY relpages DESC

LIMIT 10;[biggest relation was a table heap with 29321 pages]So your biggest table is actually 229 MB. Something is not addingAlso, just to be sure that all calculations are based on your8192

actual build, can you show the results of?:

SHOW block_size;

up. I can't see any way to reconcile your previous statements with

this number. There also hasn't been any real explanation for the

statement that you have 250000 files. There must be something which

matters here which hasn't yet been mentioned. Any ideas?

-Kevin

select count(*) from notafiscalarq where arquivo is not null;

count

--------

715084

Strange, huh?

Edson.

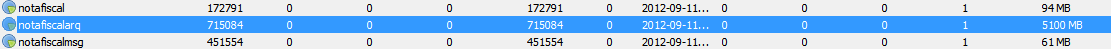

Look at the size (5100MB) of this table alone (got after Vacuum with PgAdmin 14):

This table has no deletes or updates, only inserts. It relates to the one above (notafiscal) in a 1:1 relationship.

Regards,

Edson.

Вложения

Edson Richter wrote: > Em 12/09/2012 00:37, Edson Richter escreveu: >> Em 11/09/2012 14:59, Kevin Grittner escreveu: >>> Edson Richter wrote: >>>> [biggest relation was a table heap with 29321 pages] >>>> [block size is 8 KB] >>> So your biggest table is actually 229 MB. Something is not adding >>> up. I can't see any way to reconcile your previous statements >>> with this number. There also hasn't been any real explanation for >>> the statement that you have 250000 files. There must be something >>> which matters here which hasn't yet been mentioned. Any ideas? >> I don't know why, look result of the following query (arquivo is >> the bytea field): >> >> select count(*) from notafiscalarq where arquivo is not null; >> count >> -------- >> 715084 What is a count of active rows in that table supposed to show me? > Look at the size (5100MB) of this table alone (got after Vacuum > with PgAdmin 14): Please show (copy/paste if possible) *exactly* how you arrived at that number and *exactly* how you determined that this number represented the size of a table and how you determined which table. If the results you previously posted are from the same database, you simply don't have a table heap that large. -Kevin

Em 12/09/2012 09:16, Kevin Grittner escreveu:

> Edson Richter wrote:

>> Em 12/09/2012 00:37, Edson Richter escreveu:

>>> Em 11/09/2012 14:59, Kevin Grittner escreveu:

>>>> Edson Richter wrote:

>

>>>>> [biggest relation was a table heap with 29321 pages]

>>>>> [block size is 8 KB]

>

>>>> So your biggest table is actually 229 MB. Something is not adding

>>>> up. I can't see any way to reconcile your previous statements

>>>> with this number. There also hasn't been any real explanation for

>>>> the statement that you have 250000 files. There must be something

>>>> which matters here which hasn't yet been mentioned. Any ideas?

>

>>> I don't know why, look result of the following query (arquivo is

>>> the bytea field):

>>>

>>> select count(*) from notafiscalarq where arquivo is not null;

>>> count

>>> --------

>>> 715084

>

> What is a count of active rows in that table supposed to show me?

>

>> Look at the size (5100MB) of this table alone (got after Vacuum

>> with PgAdmin 14):

>

> Please show (copy/paste if possible) *exactly* how you arrived at

> that number and *exactly* how you determined that this number

> represented the size of a table and how you determined which table.

> If the results you previously posted are from the same database, you

> simply don't have a table heap that large.

>

> -Kevin

Ok, maybe I've used wrong database by mistake (I have dozens databases

here, so it's easy to do so in such different timeframes), let's repeat

all operations in one session:

in pgsql dir, executing "du -h --max-depth 1" results:

8,6G ./data

0 ./backups

8,6G .

Executing query

SELECT pg_size_pretty(pg_database_size('mydatabase'));

pg_size_pretty

----------------

7234 MB

Executing query

SELECT pg_size_pretty(pg_relation_size('notafiscalarq'));

pg_size_pretty

----------------

52 MB

Executing query

SELECT nspname || '.' || relname AS "relation",

pg_size_pretty(pg_relation_size(C.oid)) AS "size"

FROM pg_class C

LEFT JOIN pg_namespace N ON (N.oid = C.relnamespace)

WHERE nspname NOT IN ('pg_catalog', 'information_schema')

ORDER BY pg_relation_size(C.oid) DESC

LIMIT 10;

relation | size

---------------------------------+---------

pg_toast.pg_toast_18409 | 4976 MB

pg_toast.pg_toast_18146 | 290 MB

public.cotacao | 229 MB

public.elementocalculado | 179 MB

public.cotacaotransicaosituacao | 155 MB

public.log | 112 MB

public.logradouro | 82 MB

public.cotacaonf | 60 MB

public.notafiscal | 60 MB

public.tabelacalculada | 60 MB

(10 registros)

SELECT nspname || '.' || relname AS "relation",

pg_size_pretty(pg_total_relation_size(C.oid)) AS "total_size"

FROM pg_class C

LEFT JOIN pg_namespace N ON (N.oid = C.relnamespace)

WHERE nspname NOT IN ('pg_catalog', 'information_schema')

AND C.relkind <> 'i'

AND nspname !~ '^pg_toast'

ORDER BY pg_total_relation_size(C.oid) DESC

LIMIT 10;

relation | total_size

---------------------------------+------------

public.notafiscalarq | 5102 MB

public.cotacao | 331 MB

public.elementocalculado | 312 MB

public.documentotransportearq | 294 MB

public.cotacaotransicaosituacao | 233 MB

public.log | 196 MB

public.logradouro | 149 MB

public.cotacaonf | 118 MB

public.tabelacalculada | 116 MB

public.notafiscal | 94 MB

(10 registros)

Looking at PgAdmin 14, I get the following data for table notafiscalarq:

Table Size 52 MB

Toast Table Size 5033 MB

Indexes Size 15 MB

Executing query

SELECT relkind, oid, relfilenode, reltoastrelid,

relpages, reltuples

FROM pg_class

ORDER BY relpages DESC

LIMIT 10;

results

relkind | oid | relfilenode | reltoastrelid | relpages | reltuples

---------+--------+-------------+---------------+----------+-----------

r | 18064 | 18064 | 18086 | 29332 | 639639

r | 18179 | 18179 | 0 | 22797 | 1.811e+06

r | 18116 | 18116 | 18121 | 19772 | 724370

r | 18343 | 18343 | 18347 | 14311 | 928633

r | 18352 | 18352 | 0 | 10488 | 917134

r | 18092 | 18092 | 0 | 7691 | 640709

r | 18396 | 18396 | 18404 | 7670 | 172791

r | 18558 | 18558 | 0 | 7608 | 386907

i | 747805 | 747805 | 0 | 6976 | 1.811e+06

r | 18409 | 18409 | 18413 | 6684 | 715084

Executing query

show block_size

block_size

------------

8192

So, all of this information was get using unique database session, so

they must related to same database and data files

Regards,

Edson

Edson Richter <edsonrichter@hotmail.com> wrote:

> SELECT pg_size_pretty(pg_database_size('mydatabase'));

> pg_size_pretty

> ----------------

> 7234 MB

> SELECT nspname || '.' || relname AS "relation",

> pg_size_pretty(pg_relation_size(C.oid)) AS "size"

> FROM pg_class C

> LEFT JOIN pg_namespace N ON (N.oid = C.relnamespace)

> WHERE nspname NOT IN ('pg_catalog', 'information_schema')

> ORDER BY pg_relation_size(C.oid) DESC

> LIMIT 10;

> relation | size

> ---------------------------------+---------

> pg_toast.pg_toast_18409 | 4976 MB

> pg_toast.pg_toast_18146 | 290 MB

> [ ... ]

> SELECT relkind, oid, relfilenode, reltoastrelid,

> relpages, reltuples

> FROM pg_class

> ORDER BY relpages DESC

> LIMIT 10;

>

> results

> relkind | oid | relfilenode | reltoastrelid | relpages |

> reltuples

>

---------+--------+-------------+---------------+----------+------

> -----

> r | 18064 | 18064 | 18086 | 29332 |

> 639639

> r | 18179 | 18179 | 0 | 22797 |

> 1.811e+06

> r | 18116 | 18116 | 18121 | 19772 |

> 724370

> r | 18343 | 18343 | 18347 | 14311 |

> 928633

> r | 18352 | 18352 | 0 | 10488 |

> 917134

> r | 18092 | 18092 | 0 | 7691 |

> 640709

> r | 18396 | 18396 | 18404 | 7670 |

> 172791

> r | 18558 | 18558 | 0 | 7608 |

> 386907

> i | 747805 | 747805 | 0 | 6976 |

> 1.811e+06

> r | 18409 | 18409 | 18413 | 6684 |

> 715084

When I run that query on a big database here, my top three entries

are for relkind of 't' (starting with a 2TB TOAST table for our

document images) and number four is a TOAST index. It's hard to see

why you don't have TOAST entries at the top of your list. Instead

of a VACUUM FULL, could you try a VACUUM FREEZE VERBOSE ANALYZE

against the full database (using a database superuser login) and

capture the output? Please post the portion of the output for the

big table and its TOAST table, and see whether the numbers

(pg_class.relpages * 8KB versus pg_relation_size()) start to match

up.

You might also want to confirm that neither pg_stat_activity nor

pg_prepared_xacts shows any lingering transactions started more than

a few minutes ago.

> So, all of this information was get using unique database session,

> so they must related to same database and data files

Thanks, that helps suggest where to look next.

-Kevin

Em 12/09/2012 15:09, Kevin Grittner escreveu: > VACUUM FREEZE VERBOSE ANALYZE Sorry, most of the messages are in portuguese, but I guess numbers are more important, right? INFO: índice "pk_notafiscalarq" agora contém 715084 versões de registros em 1963 páginas DETALHE: 0 versões de registros de índices foram apagadas. 0 páginas de índice foram removidas, 0 são reutilizáveis. CPU 0.00s/0.00u sec elapsed 0.12 sec. INFO: "notafiscalarq": encontrados 0 versões de registros removíveis e 715084 não-removíveis em 6684 de 6684 páginas DETALHE: 0 versões de registros não vigentes não podem ser removidas ainda. Havia 0 ponteiros de itens não utilizados. 0 páginas estão completamente vazias. CPU 0.03s/0.04u sec elapsed 0.38 sec. INFO: limpando "pg_toast.pg_toast_18409" INFO: índice "pg_toast_18409_index" agora contém 2643728 versões de registros em 7251 páginas DETALHE: 0 versões de registros de índices foram apagadas. 0 páginas de índice foram removidas, 0 são reutilizáveis. CPU 0.00s/0.00u sec elapsed 0.10 sec. INFO: "pg_toast_18409": encontrados 0 versões de registros removíveis e 2643728 não-removíveis em 636949 de 636949 páginas DETALHE: 0 versões de registros não vigentes não podem ser removidas ainda. Havia 0 ponteiros de itens não utilizados. 0 páginas estão completamente vazias. CPU 2.45s/1.30u sec elapsed 30.16 sec. INFO: analisando "public.notafiscalarq" INFO: "notafiscalarq": processados 6684 de 6684 páginas, contendo 715084 registros vigentes e 0 registros não vigentes; 30000 registros amostrados, 715084 registros totais estimados Thanks, Edson

Em 12/09/2012 21:50, Edson Richter escreveu: > Em 12/09/2012 15:09, Kevin Grittner escreveu: >> VACUUM FREEZE VERBOSE ANALYZE > Sorry, most of the messages are in portuguese, but I guess numbers are > more important, right? > > INFO: índice "pk_notafiscalarq" agora contém 715084 versões de > registros em 1963 páginas > DETALHE: 0 versões de registros de índices foram apagadas. > 0 páginas de índice foram removidas, 0 são reutilizáveis. > CPU 0.00s/0.00u sec elapsed 0.12 sec. > INFO: "notafiscalarq": encontrados 0 versões de registros removíveis > e 715084 não-removíveis em 6684 de 6684 páginas > DETALHE: 0 versões de registros não vigentes não podem ser removidas > ainda. > Havia 0 ponteiros de itens não utilizados. > 0 páginas estão completamente vazias. > CPU 0.03s/0.04u sec elapsed 0.38 sec. > INFO: limpando "pg_toast.pg_toast_18409" > INFO: índice "pg_toast_18409_index" agora contém 2643728 versões de > registros em 7251 páginas > DETALHE: 0 versões de registros de índices foram apagadas. > 0 páginas de índice foram removidas, 0 são reutilizáveis. > CPU 0.00s/0.00u sec elapsed 0.10 sec. > INFO: "pg_toast_18409": encontrados 0 versões de registros removíveis > e 2643728 não-removíveis em 636949 de 636949 páginas > DETALHE: 0 versões de registros não vigentes não podem ser removidas > ainda. > Havia 0 ponteiros de itens não utilizados. > 0 páginas estão completamente vazias. > CPU 2.45s/1.30u sec elapsed 30.16 sec. > INFO: analisando "public.notafiscalarq" > INFO: "notafiscalarq": processados 6684 de 6684 páginas, contendo > 715084 registros vigentes e 0 registros não vigentes; 30000 registros > amostrados, 715084 registros totais estimados > > > Thanks, > > Edson > > Anything else I can do from here? Edson

Edson Richter <edsonrichter@hotmail.com> wrote: > Anything else I can do from here? Did that result in more accurate numbers for pg_class.reltuples? -Kevin

Em 13/09/2012 16:12, Kevin Grittner escreveu:

> Edson Richter <edsonrichter@hotmail.com> wrote:

>

>> Anything else I can do from here?

>

> Did that result in more accurate numbers for pg_class.reltuples?

>

> -Kevin

>

I don't how number were not accurate - for me they always seemed

consistent with what I knew about it...

Let's repeat all tests again (see, data grows on daily basis, so numbers

will be a bit different - yes, I've run the vacuum again):

SELECT pg_size_pretty(pg_database_size('mydatabase'));

pg_size_pretty

----------------

7238 MB

SELECT pg_size_pretty(pg_relation_size('notafiscalarq'));

pg_size_pretty

----------------

52 MB

SELECT nspname || '.' || relname AS "relation",

pg_size_pretty(pg_relation_size(C.oid)) AS "size"

FROM pg_class C

LEFT JOIN pg_namespace N ON (N.oid = C.relnamespace)

WHERE nspname NOT IN ('pg_catalog', 'information_schema')

ORDER BY pg_relation_size(C.oid) DESC

LIMIT 10;

relation | size

---------------------------------+---------

pg_toast.pg_toast_18409 | 4976 MB

pg_toast.pg_toast_18146 | 290 MB

public.cotacao | 229 MB

public.elementocalculado | 179 MB

public.cotacaotransicaosituacao | 155 MB

public.log | 112 MB

public.logradouro | 82 MB

public.cotacaonf | 60 MB

public.notafiscal | 60 MB

public.tabelacalculada | 60 MB

SELECT nspname || '.' || relname AS "relation",

pg_size_pretty(pg_total_relation_size(C.oid)) AS "total_size"

FROM pg_class C

LEFT JOIN pg_namespace N ON (N.oid = C.relnamespace)

WHERE nspname NOT IN ('pg_catalog', 'information_schema')

AND C.relkind <> 'i'

AND nspname !~ '^pg_toast'

ORDER BY pg_total_relation_size(C.oid) DESC

LIMIT 10;

relation | total_size

---------------------------------+------------

public.notafiscalarq | 5102 MB

public.cotacao | 331 MB

public.elementocalculado | 313 MB

public.documentotransportearq | 294 MB

public.cotacaotransicaosituacao | 233 MB

public.log | 196 MB

public.logradouro | 149 MB

public.cotacaonf | 118 MB

public.tabelacalculada | 116 MB

public.notafiscal | 94 MB

SELECT relkind, oid, relfilenode, reltoastrelid,

relpages, reltuples

FROM pg_class

ORDER BY relpages DESC

LIMIT 10;

relkind | oid | relfilenode | reltoastrelid | relpages | reltuples

---------+-------+-------------+---------------+----------+-------------

t | 18413 | 18413 | 0 | 636949 |

2.64373e+06

t | 18150 | 18150 | 0 | 37086 |

149502

r | 18064 | 18064 | 18086 | 29347 | 639695

r | 18179 | 18179 | 0 | 22901 |

1.8172e+06

r | 18116 | 18116 | 18121 | 19779 | 724619

r | 18343 | 18343 | 18347 | 14325 | 928805

r | 18352 | 18352 | 0 | 10488 |

917134

r | 18092 | 18092 | 0 | 7695 |

640804

r | 18396 | 18396 | 18404 | 7671 | 172792

r | 18558 | 18558 | 0 | 7644 |

388332

show block_size;

block_size

------------

8192

Regards,

Edson

On Tue, Sep 11, 2012 at 9:34 AM, Edson Richter <edsonrichter@hotmail.com> wrote:

>

> No, there is no problem. Just trying to reduce database size forcing these

> fields to compress.

> Actual database size = 8Gb

> Backup size = 1.6Gb (5x smaller)

>

> Seems to me (IMHO) that there is room for improvement in database storage

> (we don't have many indexes, and biggest tables are just the ones with bytea

> fields). That's why I've asked for experts counseling.

There are two things to keep in mind. One is that each datum is

compressed separately, so that a lot of redundancy that occurs between

fields of different tuples, but not within any given tuple, will not

be available to TOAST, but will be available to the compression of a

dump file.

Another thing is that PG's TOAST compression was designed to be simple

and fast and patent free, and often it is not all that good. It is

quite good if you have long stretches of repeats of a single

character, or exact densely spaced repeats of a sequence of characters

("123123123123123..."), but when the redundancy is less simple it does

a much worse job than gzip, for example, does.

It is possible but unlikely there is a bug somewhere, but most likely

your documents just aren't very compressible using pglz_compress.

Cheers,

Jeff

Em 17/09/2012 00:17, Jeff Janes escreveu:

> On Tue, Sep 11, 2012 at 9:34 AM, Edson Richter <edsonrichter@hotmail.com> wrote:

>> No, there is no problem. Just trying to reduce database size forcing these

>> fields to compress.

>> Actual database size = 8Gb

>> Backup size = 1.6Gb (5x smaller)

>>

>> Seems to me (IMHO) that there is room for improvement in database storage

>> (we don't have many indexes, and biggest tables are just the ones with bytea

>> fields). That's why I've asked for experts counseling.

> There are two things to keep in mind. One is that each datum is

> compressed separately, so that a lot of redundancy that occurs between

> fields of different tuples, but not within any given tuple, will not

> be available to TOAST, but will be available to the compression of a

> dump file.

>

> Another thing is that PG's TOAST compression was designed to be simple

> and fast and patent free, and often it is not all that good. It is

> quite good if you have long stretches of repeats of a single

> character, or exact densely spaced repeats of a sequence of characters

> ("123123123123123..."), but when the redundancy is less simple it does

> a much worse job than gzip, for example, does.

>

> It is possible but unlikely there is a bug somewhere, but most likely

> your documents just aren't very compressible using pglz_compress.

>

> Cheers,

>

> Jeff

Most of data is XML (few are PDF).

Probably, the best solution for me is to compress before sending to

database.

Thanks for the info.

Regards,

Edson.