Обсуждение: remaining sql/json patches

Hello.

I'm starting a new thread for $subject per Alvaro's suggestion at [1].

So the following sql/json things still remain to be done:

* sql/json query functions:

json_exists()

json_query()

json_value()

* other sql/json functions:

json()

json_scalar()

json_serialize()

* finally:

json_table

Attached is the rebased patch for the 1st part.

It also addresses Alvaro's review comments on Apr 4, though see my

comments below.

On Tue, Apr 4, 2023 at 9:36 PM Alvaro Herrera <alvherre@alvh.no-ip.org> wrote:

> On 2023-Apr-04, Amit Langote wrote:

> > On Tue, Apr 4, 2023 at 2:16 AM Alvaro Herrera <alvherre@alvh.no-ip.org> wrote:

> > > - the gram.y solution to the "ON ERROR/ON EMPTY" clauses is quite ugly.

> > > I think we could make that stuff use something similar to

> > > ConstraintAttributeSpec with an accompanying post-processing function.

> > > That would reduce the number of ad-hoc hacks, which seem excessive.

>>

> > Do you mean the solution involving the JsonBehavior node?

>

> Right. It has spilled as the separate on_behavior struct in the core

> parser %union in addition to the raw jsbehavior, which is something

> we've gone 30 years without having, and I don't see why we should start

> now.

I looked into trying to make this look like ConstraintAttributeSpec

but came to the conclusion that that's not quite doable in this case.

A "behavior" cannot be represented simply as an integer flag, because

there's `DEFAULT a_expr` to fit in, so it's got to be this

JsonBehavior node. However...

> This stuff is terrible:

>

> json_exists_error_clause_opt:

> json_exists_error_behavior ON ERROR_P { $$ = $1; }

> | /* EMPTY */ { $$ = NULL; }

> ;

>

> json_exists_error_behavior:

> ERROR_P { $$ = makeJsonBehavior(JSON_BEHAVIOR_ERROR, NULL); }

> | TRUE_P { $$ = makeJsonBehavior(JSON_BEHAVIOR_TRUE, NULL); }

> | FALSE_P { $$ = makeJsonBehavior(JSON_BEHAVIOR_FALSE, NULL); }

> | UNKNOWN { $$ = makeJsonBehavior(JSON_BEHAVIOR_UNKNOWN, NULL); }

> ;

>

> json_value_behavior:

> NULL_P { $$ = makeJsonBehavior(JSON_BEHAVIOR_NULL, NULL); }

> | ERROR_P { $$ = makeJsonBehavior(JSON_BEHAVIOR_ERROR, NULL); }

> | DEFAULT a_expr { $$ = makeJsonBehavior(JSON_BEHAVIOR_DEFAULT, $2); }

> ;

>

> json_value_on_behavior_clause_opt:

> json_value_behavior ON EMPTY_P

> { $$.on_empty = $1; $$.on_error = NULL; }

> | json_value_behavior ON EMPTY_P json_value_behavior ON ERROR_P

> { $$.on_empty = $1; $$.on_error = $4; }

> | json_value_behavior ON ERROR_P

> { $$.on_empty = NULL; $$.on_error = $1; }

> | /* EMPTY */

> { $$.on_empty = NULL; $$.on_error = NULL; }

> ;

>

> json_query_behavior:

> ERROR_P { $$ = makeJsonBehavior(JSON_BEHAVIOR_ERROR, NULL); }

> | NULL_P { $$ = makeJsonBehavior(JSON_BEHAVIOR_NULL, NULL); }

> | EMPTY_P ARRAY { $$ = makeJsonBehavior(JSON_BEHAVIOR_EMPTY_ARRAY, NULL); }

> /* non-standard, for Oracle compatibility only */

> | EMPTY_P { $$ = makeJsonBehavior(JSON_BEHAVIOR_EMPTY_ARRAY, NULL); }

> | EMPTY_P OBJECT_P { $$ = makeJsonBehavior(JSON_BEHAVIOR_EMPTY_OBJECT, NULL); }

> | DEFAULT a_expr { $$ = makeJsonBehavior(JSON_BEHAVIOR_DEFAULT, $2); }

> ;

>

> json_query_on_behavior_clause_opt:

> json_query_behavior ON EMPTY_P

> { $$.on_empty = $1; $$.on_error = NULL; }

> | json_query_behavior ON EMPTY_P json_query_behavior ON ERROR_P

> { $$.on_empty = $1; $$.on_error = $4; }

> | json_query_behavior ON ERROR_P

> { $$.on_empty = NULL; $$.on_error = $1; }

> | /* EMPTY */

> { $$.on_empty = NULL; $$.on_error = NULL; }

> ;

>

> Surely this can be made cleaner.

...I've managed to reduce the above down to:

MergeWhenClause *mergewhen;

struct KeyActions *keyactions;

struct KeyAction *keyaction;

+ JsonBehavior *jsbehavior;

...

+%type <jsbehavior> json_value_behavior

+ json_query_behavior

+ json_exists_behavior

...

+json_query_behavior:

+ ERROR_P { $$ = makeJsonBehavior(JSON_BEHAVIOR_ERROR, NULL); }

+ | NULL_P { $$ = makeJsonBehavior(JSON_BEHAVIOR_NULL, NULL); }

+ | DEFAULT a_expr { $$ =

makeJsonBehavior(JSON_BEHAVIOR_DEFAULT, $2); }

+ | EMPTY_P ARRAY { $$ =

makeJsonBehavior(JSON_BEHAVIOR_EMPTY_ARRAY, NULL); }

+ | EMPTY_P OBJECT_P { $$ =

makeJsonBehavior(JSON_BEHAVIOR_EMPTY_OBJECT, NULL); }

+ /* non-standard, for Oracle compatibility only */

+ | EMPTY_P { $$ =

makeJsonBehavior(JSON_BEHAVIOR_EMPTY_ARRAY, NULL); }

+ ;

+

+json_exists_behavior:

+ ERROR_P { $$ = makeJsonBehavior(JSON_BEHAVIOR_ERROR, NULL); }

+ | TRUE_P { $$ = makeJsonBehavior(JSON_BEHAVIOR_TRUE, NULL); }

+ | FALSE_P { $$ =

makeJsonBehavior(JSON_BEHAVIOR_FALSE, NULL); }

+ | UNKNOWN { $$ =

makeJsonBehavior(JSON_BEHAVIOR_UNKNOWN, NULL); }

+ ;

+

+json_value_behavior:

+ NULL_P { $$ = makeJsonBehavior(JSON_BEHAVIOR_NULL, NULL); }

+ | ERROR_P { $$ =

makeJsonBehavior(JSON_BEHAVIOR_ERROR, NULL); }

+ | DEFAULT a_expr { $$ =

makeJsonBehavior(JSON_BEHAVIOR_DEFAULT, $2); }

+ ;

Though, that does mean that there are now more rules for

func_expr_common_subexpr to implement the variations of ON ERROR, ON

EMPTY clauses for each of JSON_EXISTS, JSON_QUERY, and JSON_VALUE.

> By the way -- that comment about clauses being non-standard, can you

> spot exactly *which* clauses that comment applies to?

I've moved comment as shown above to make clear that a bare EMPTY_P is

needed for Oracle compatibility

On Tue, Apr 4, 2023 at 2:16 AM Alvaro Herrera <alvherre@alvh.no-ip.org> wrote:

> - the changes in formatting.h have no explanation whatsoever. At the

> very least, the new function should have a comment in the .c file.

> (And why is it at end of file? I bet there's a better location)

Apparently, the newly exported routine is needed in the JSON-specific

subroutine for the planner's contain_mutable_functions_walker(), to

check if a JsonExpr's path_spec contains any timezone-dependent

constant. In the attached, I've changed the newly exported function's

name as follows:

datetime_format_flags -> datetime_format_has_tz

which let me do away with exporting those DCH_* constants in formatting.h.

> - some nasty hacks are being used in the ECPG grammar with no tests at

> all. It's easy to add a few lines to the .pgc file I added in prior

> commits.

Ah, those ecpg.trailer changes weren't in the original commit that

added added SQL/JSON query functions (1a36bc9dba8ea), but came in

5f0adec2537d, 83f1c7b742e8 to fix some damage caused by the former's

making STRING a keyword. If I don't include the ecpg.trailer bit,

test_informix.pgc fails, so I think the change is already covered.

> - Some functions in jsonfuncs.c have changed from throwing hard errors

> into soft ones. I think this deserves more commentary.

I've merged the delta patch I had posted earlier addressing this [2]

into the attached.

> - func.sgml: The new functions are documented in a separate table for no

> reason that I can see. Needs to be merged into one of the existing

> tables. I didn't actually review the docs.

Hmm, so we already have "SQL/JSON Testing Functions" that were

committed into v16 in a separate table (Table 9.48) under "9.16.1.

Processing and Creating JSON Data". So, I don't see a problem with

adding "SQL/JSON Query Functions" in a separate table, though maybe it

should not be under the same sub-section. Maybe under "9.16.2. The

SQL/JSON Path Language" is more appropriate?

I'll rebase and post the patches for "other sql/json functions" and

"json_table" shortly.

--

Thanks, Amit Langote

EDB: http://www.enterprisedb.com

[1] https://www.postgresql.org/message-id/20230503181732.26hx5ihbdkmzhlyw%40alvherre.pgsql

[2] https://www.postgresql.org/message-id/CA%2BHiwqHGghuFpxE%3DpfUFPT%2BZzKvHWSN4BcrWr%3DZRjd4i4qubfQ%40mail.gmail.com

Вложения

On Mon, Jun 19, 2023 at 5:31 PM Amit Langote <amitlangote09@gmail.com> wrote: > So the following sql/json things still remain to be done: > > * sql/json query functions: > json_exists() > json_query() > json_value() > > * other sql/json functions: > json() > json_scalar() > json_serialize() > > * finally: > json_table > > Attached is the rebased patch for the 1st part. ... > I'll rebase and post the patches for "other sql/json functions" and > "json_table" shortly. And here they are. I realized that the patch for the "other sql/json functions" part is relatively straightforward and has no dependence on the "sql/json query functions" part getting done first. So I've made that one the 0001 patch. The patch I posted in the last email is now 0002, though it only has changes related to changing the order of the patch, so I decided not to change the patch version marker (v1). -- Thanks, Amit Langote EDB: http://www.enterprisedb.com

Вложения

On 21.06.23 10:25, Amit Langote wrote: > I realized that the patch for the "other sql/json functions" part is > relatively straightforward and has no dependence on the "sql/json > query functions" part getting done first. So I've made that one the > 0001 patch. The patch I posted in the last email is now 0002, though > it only has changes related to changing the order of the patch, so I > decided not to change the patch version marker (v1). (I suggest you change the version number anyway, next time. There are plenty of numbers available.) The 0001 patch contains a change to doc/src/sgml/keywords/sql2016-02-reserved.txt, which seems inappropriate. The additional keywords are already listed in the 2023 file, and they are not SQL:2016 keywords. Another thing, I noticed that the SQL/JSON patches in PG16 introduced some nonstandard indentation in gram.y. I would like to apply the attached patch to straighten this out.

Вложения

On Fri, Jul 7, 2023 at 8:31 PM Peter Eisentraut <peter@eisentraut.org> wrote: > On 21.06.23 10:25, Amit Langote wrote: > > I realized that the patch for the "other sql/json functions" part is > > relatively straightforward and has no dependence on the "sql/json > > query functions" part getting done first. So I've made that one the > > 0001 patch. The patch I posted in the last email is now 0002, though > > it only has changes related to changing the order of the patch, so I > > decided not to change the patch version marker (v1). > > (I suggest you change the version number anyway, next time. There are > plenty of numbers available.) Will do. :) > The 0001 patch contains a change to > doc/src/sgml/keywords/sql2016-02-reserved.txt, which seems > inappropriate. The additional keywords are already listed in the 2023 > file, and they are not SQL:2016 keywords. Ah, indeed. Will remove. > Another thing, I noticed that the SQL/JSON patches in PG16 introduced > some nonstandard indentation in gram.y. I would like to apply the > attached patch to straighten this out. Sounds fine to me. -- Thanks, Amit Langote EDB: http://www.enterprisedb.com

On Fri, Jul 7, 2023 at 8:59 PM Amit Langote <amitlangote09@gmail.com> wrote: > On Fri, Jul 7, 2023 at 8:31 PM Peter Eisentraut <peter@eisentraut.org> wrote: > > On 21.06.23 10:25, Amit Langote wrote: > > > I realized that the patch for the "other sql/json functions" part is > > > relatively straightforward and has no dependence on the "sql/json > > > query functions" part getting done first. So I've made that one the > > > 0001 patch. The patch I posted in the last email is now 0002, though > > > it only has changes related to changing the order of the patch, so I > > > decided not to change the patch version marker (v1). > > > > (I suggest you change the version number anyway, next time. There are > > plenty of numbers available.) > > Will do. :) Here's v2. 0001 and 0002 are new patches for some improvements of the existing code. In the main patches (0003~), I've mainly removed a few nonterminals in favor of new rules in the remaining nonterminals, especially in the JSON_TABLE patch. I've also removed additions to sql2016-02-reserved.txt as Peter suggested. -- Thanks, Amit Langote EDB: http://www.enterprisedb.com

Вложения

Looking at 0001 now. I noticed that it adds JSON, JSON_SCALAR and JSON_SERIALIZE as reserved keywords to doc/src/sgml/keywords/sql2016-02-reserved.txt; but those keywords do not appear in the 2016 standard as reserved. I see that those keywords appear as reserved in sql2023-02-reserved.txt, so I suppose you're covered as far as that goes; you don't need to patch sql2016, and indeed that's the wrong thing to do. I see that you add json_returning_clause_opt, but we already have json_output_clause_opt. Shouldn't these two be one and the same? I think the new name is more sensible than the old one, since the governing keyword is RETURNING; I suppose naming it "output" comes from the fact that the standard calls this <JSON output clause>. typo "requeted" I'm not in love with the fact that JSON and JSONB have pretty much parallel type categorizing functionality. It seems entirely artificial. Maybe this didn't matter when these were contained inside each .c file and nobody else had to deal with that, but I think it's not good to make this an exported concept. Is it possible to do away with that? I mean, reduce both to a single categorization enum, and a single categorization API. Here you have to cast the enum value to int in order to make ExecInitExprRec work, and that seems a bit lame; moreso when the "is_jsonb" is determined separately (cf. ExecEvalJsonConstructor) In the 2023 standard, JSON_SCALAR is just <JSON scalar> ::= JSON_SCALAR <left paren> <value expression> <right paren> but we seem to have added a <JSON output format> clause to it. Should we really? -- Álvaro Herrera Breisgau, Deutschland — https://www.EnterpriseDB.com/ "Entristecido, Wutra (canción de Las Barreras) echa a Freyr a rodar y a nosotros al mar"

Hi Alvaro,

On Fri, Jul 7, 2023 at 9:28 PM Alvaro Herrera <alvherre@alvh.no-ip.org> wrote:

> Looking at 0001 now.

Thanks.

> I noticed that it adds JSON, JSON_SCALAR and JSON_SERIALIZE as reserved

> keywords to doc/src/sgml/keywords/sql2016-02-reserved.txt; but those

> keywords do not appear in the 2016 standard as reserved. I see that

> those keywords appear as reserved in sql2023-02-reserved.txt, so I

> suppose you're covered as far as that goes; you don't need to patch

> sql2016, and indeed that's the wrong thing to do.

Yeah, fixed that after Peter pointed it out.

> I see that you add json_returning_clause_opt, but we already have

> json_output_clause_opt. Shouldn't these two be one and the same?

> I think the new name is more sensible than the old one, since the

> governing keyword is RETURNING; I suppose naming it "output" comes from

> the fact that the standard calls this <JSON output clause>.

One difference between the two is that json_output_clause_opt allows

specifying the FORMAT clause in addition to the RETURNING type name,

while json_returning_clause_op only allows specifying the type name.

I'm inclined to keep only json_returning_clause_opt as you suggest and

make parse_expr.c output an error if the FORMAT clause is specified in

JSON() and JSON_SCALAR(), so turning the current syntax error on

specifying RETURNING ... FORMAT for these functions into a parsing

error. Done that way in the attached updated patch and also updated

the latter patch that adds JSON_EXISTS() and JSON_VALUE() to have

similar behavior.

> typo "requeted"

Fixed.

> I'm not in love with the fact that JSON and JSONB have pretty much

> parallel type categorizing functionality. It seems entirely artificial.

> Maybe this didn't matter when these were contained inside each .c file

> and nobody else had to deal with that, but I think it's not good to make

> this an exported concept. Is it possible to do away with that? I mean,

> reduce both to a single categorization enum, and a single categorization

> API. Here you have to cast the enum value to int in order to make

> ExecInitExprRec work, and that seems a bit lame; moreso when the

> "is_jsonb" is determined separately (cf. ExecEvalJsonConstructor)

OK, I agree that a unified categorizing API might be better. I'll

look at making this better. Btw, does src/include/common/jsonapi.h

look like an appropriate place for that?

> In the 2023 standard, JSON_SCALAR is just

>

> <JSON scalar> ::= JSON_SCALAR <left paren> <value expression> <right paren>

>

> but we seem to have added a <JSON output format> clause to it. Should

> we really?

Hmm, I am not seeing <JSON output format> in the rule for JSON_SCALAR,

which looks like this in the current grammar:

func_expr_common_subexpr:

...

| JSON_SCALAR '(' a_expr json_returning_clause_opt ')'

{

JsonScalarExpr *n = makeNode(JsonScalarExpr);

n->expr = (Expr *) $3;

n->output = (JsonOutput *) $4;

n->location = @1;

$$ = (Node *) n;

}

...

json_returning_clause_opt:

RETURNING Typename

{

JsonOutput *n = makeNode(JsonOutput);

n->typeName = $2;

n->returning = makeNode(JsonReturning);

n->returning->format =

makeJsonFormat(JS_FORMAT_DEFAULT, JS_ENC_DEFAULT, @2);

$$ = (Node *) n;

}

| /* EMPTY */ { $$ = NULL; }

;

Per what I wrote above, the grammar for JSON() and JSON_SCALAR() does

not allow specifying the FORMAT clause. Though considering what you

wrote, the RETURNING clause does appear to be an extension to the

standard's spec. I can't find any reasoning in the original

discussion as to how that came about, except an email from Andrew [1]

saying that he added it back to the patch.

Here's v3 in the meantime.

--

Thanks, Amit Langote

EDB: http://www.enterprisedb.com

[1] https://www.postgresql.org/message-id/flat/cd0bb935-0158-78a7-08b5-904886deac4b%40postgrespro.ru

Вложения

On 2023-Jul-10, Amit Langote wrote: > > I see that you add json_returning_clause_opt, but we already have > > json_output_clause_opt. Shouldn't these two be one and the same? > > I think the new name is more sensible than the old one, since the > > governing keyword is RETURNING; I suppose naming it "output" comes from > > the fact that the standard calls this <JSON output clause>. > > One difference between the two is that json_output_clause_opt allows > specifying the FORMAT clause in addition to the RETURNING type name, > while json_returning_clause_op only allows specifying the type name. > > I'm inclined to keep only json_returning_clause_opt as you suggest and > make parse_expr.c output an error if the FORMAT clause is specified in > JSON() and JSON_SCALAR(), so turning the current syntax error on > specifying RETURNING ... FORMAT for these functions into a parsing > error. Done that way in the attached updated patch and also updated > the latter patch that adds JSON_EXISTS() and JSON_VALUE() to have > similar behavior. Yeah, that's reasonable. > > I'm not in love with the fact that JSON and JSONB have pretty much > > parallel type categorizing functionality. It seems entirely artificial. > > Maybe this didn't matter when these were contained inside each .c file > > and nobody else had to deal with that, but I think it's not good to make > > this an exported concept. Is it possible to do away with that? I mean, > > reduce both to a single categorization enum, and a single categorization > > API. Here you have to cast the enum value to int in order to make > > ExecInitExprRec work, and that seems a bit lame; moreso when the > > "is_jsonb" is determined separately (cf. ExecEvalJsonConstructor) > > OK, I agree that a unified categorizing API might be better. I'll > look at making this better. Btw, does src/include/common/jsonapi.h > look like an appropriate place for that? Hmm, that header is frontend-available, and the type-category appears to be backend-only, so maybe no. Perhaps jsonfuncs.h is more apropos? execExpr.c is already dealing with array internals, so having to deal with json internals doesn't seem completely out of place. > > In the 2023 standard, JSON_SCALAR is just > > > > <JSON scalar> ::= JSON_SCALAR <left paren> <value expression> <right paren> > > > > but we seem to have added a <JSON output format> clause to it. Should > > we really? > > Hmm, I am not seeing <JSON output format> in the rule for JSON_SCALAR, Agh, yeah, I confused myself, sorry. > Per what I wrote above, the grammar for JSON() and JSON_SCALAR() does > not allow specifying the FORMAT clause. Though considering what you > wrote, the RETURNING clause does appear to be an extension to the > standard's spec. Hmm, I see that <JSON output clause> (which is RETURNING plus optional FORMAT) appears included in JSON_OBJECT, JSON_ARRAY, JSON_QUERY, JSON_SERIALIZE, JSON_OBJECTAGG, JSON_ARRAYAGG. It's not necessarily a bad thing to have it in other places, but we should consider it carefully. Do we really want/need it in JSON() and JSON_SCALAR()? -- Álvaro Herrera 48°01'N 7°57'E — https://www.EnterpriseDB.com/

I forgot to add: * 0001 looks an obvious improvement. You could just push it now, to avoid carrying it forward anymore. I would just put the constructName ahead of value expr in the argument list, though. * 0002: I have no idea what this is (though I probably should). I would also push it right away -- if anything, so that we figure out sooner that it was actually needed in the first place. Or maybe you just need the right test cases? -- Álvaro Herrera 48°01'N 7°57'E — https://www.EnterpriseDB.com/

On Mon, Jul 10, 2023 at 11:52 PM Alvaro Herrera <alvherre@alvh.no-ip.org> wrote: > I forgot to add: Thanks for the review of these. > * 0001 looks an obvious improvement. You could just push it now, to > avoid carrying it forward anymore. I would just put the constructName > ahead of value expr in the argument list, though. Sure, that makes sense. > * 0002: I have no idea what this is (though I probably should). I would > also push it right away -- if anything, so that we figure out sooner > that it was actually needed in the first place. Or maybe you just need > the right test cases? Hmm, I don't think having or not having CaseTestExpr makes a difference to the result of evaluating JsonValueExpr.format_expr, so there are no test cases to prove one way or the other. After staring at this again for a while, I think I figured out why the CaseTestExpr might have been put there in the first place. It seems to have to do with the fact that JsonValueExpr.raw_expr is currently evaluated independently of JsonValueExpr.formatted_expr and the CaseTestExpr propagates the result of the former to the evaluation of the latter. Actually, formatted_expr is effectively formatting_function(<result-of-raw_expr>), so if we put raw_expr itself into formatted_expr such that it is evaluated as part of evaluating formatted_expr, then there is no need for the CaseTestExpr as the propagator for raw_expr's result. I've expanded the commit message to mention the details. I'll push these tomorrow. On Mon, Jul 10, 2023 at 11:47 PM Alvaro Herrera <alvherre@alvh.no-ip.org> wrote: > On 2023-Jul-10, Amit Langote wrote: > > > I'm not in love with the fact that JSON and JSONB have pretty much > > > parallel type categorizing functionality. It seems entirely artificial. > > > Maybe this didn't matter when these were contained inside each .c file > > > and nobody else had to deal with that, but I think it's not good to make > > > this an exported concept. Is it possible to do away with that? I mean, > > > reduce both to a single categorization enum, and a single categorization > > > API. Here you have to cast the enum value to int in order to make > > > ExecInitExprRec work, and that seems a bit lame; moreso when the > > > "is_jsonb" is determined separately (cf. ExecEvalJsonConstructor) > > > > OK, I agree that a unified categorizing API might be better. I'll > > look at making this better. Btw, does src/include/common/jsonapi.h > > look like an appropriate place for that? > > Hmm, that header is frontend-available, and the type-category appears to > be backend-only, so maybe no. Perhaps jsonfuncs.h is more apropos? > execExpr.c is already dealing with array internals, so having to deal > with json internals doesn't seem completely out of place. OK, attached 0003 does it like that. Essentially, I decided to only keep JsonTypeCategory and json_categorize_type(), with some modifications to accommodate the callers in jsonb.c. > > > In the 2023 standard, JSON_SCALAR is just > > > > > > <JSON scalar> ::= JSON_SCALAR <left paren> <value expression> <right paren> > > > > > > but we seem to have added a <JSON output format> clause to it. Should > > > we really? > > > > Hmm, I am not seeing <JSON output format> in the rule for JSON_SCALAR, > > Agh, yeah, I confused myself, sorry. > > > Per what I wrote above, the grammar for JSON() and JSON_SCALAR() does > > not allow specifying the FORMAT clause. Though considering what you > > wrote, the RETURNING clause does appear to be an extension to the > > standard's spec. > > Hmm, I see that <JSON output clause> (which is RETURNING plus optional > FORMAT) appears included in JSON_OBJECT, JSON_ARRAY, JSON_QUERY, > JSON_SERIALIZE, JSON_OBJECTAGG, JSON_ARRAYAGG. It's not necessarily a > bad thing to have it in other places, but we should consider it > carefully. Do we really want/need it in JSON() and JSON_SCALAR()? I thought that removing that support breaks JSON_TABLE() or something but it doesn't, so maybe we can do without the extension if there's no particular reason it's there in the first place. Maybe Andrew (cc'd) remembers why he decided in [1] to (re-) add the RETURNING clause to JSON() and JSON_SCALAR()? Updated patches, with 0003 being a new refactoring patch, are attached. Patches 0004~ contain a few updates around JsonValueExpr. Specifically, I removed the case for T_JsonValueExpr in transformExprRecurse(), because I realized that JsonValueExpr expressions never appear embedded in other expressions. That allowed me to get rid of some needless refactoring around transformJsonValueExpr() in the patch that adds JSON_VALUE() etc. -- Thanks, Amit Langote EDB: http://www.enterprisedb.com [1] https://www.postgresql.org/message-id/1d44d832-4ea9-1ec9-81e9-bc6b2bd8cc43%40dunslane.net

Вложения

- v4-0003-Unify-JSON-categorize-type-API-and-export-for-ext.patch

- v4-0004-SQL-JSON-functions.patch

- v4-0007-Claim-SQL-standard-compliance-for-SQL-JSON-featur.patch

- v4-0006-JSON_TABLE.patch

- v4-0005-SQL-JSON-query-functions.patch

- v4-0002-Don-t-include-CaseTestExpr-in-JsonValueExpr.forma.patch

- v4-0001-Pass-constructName-to-transformJsonValueExpr.patch

On Wed, Jul 12, 2023 at 6:41 PM Amit Langote <amitlangote09@gmail.com> wrote: > On Mon, Jul 10, 2023 at 11:52 PM Alvaro Herrera <alvherre@alvh.no-ip.org> wrote: > > I forgot to add: > > Thanks for the review of these. > > > * 0001 looks an obvious improvement. You could just push it now, to > > avoid carrying it forward anymore. I would just put the constructName > > ahead of value expr in the argument list, though. > > Sure, that makes sense. > > > * 0002: I have no idea what this is (though I probably should). I would > > also push it right away -- if anything, so that we figure out sooner > > that it was actually needed in the first place. Or maybe you just need > > the right test cases? > > Hmm, I don't think having or not having CaseTestExpr makes a > difference to the result of evaluating JsonValueExpr.format_expr, so > there are no test cases to prove one way or the other. > > After staring at this again for a while, I think I figured out why the > CaseTestExpr might have been put there in the first place. It seems > to have to do with the fact that JsonValueExpr.raw_expr is currently > evaluated independently of JsonValueExpr.formatted_expr and the > CaseTestExpr propagates the result of the former to the evaluation of > the latter. Actually, formatted_expr is effectively > formatting_function(<result-of-raw_expr>), so if we put raw_expr > itself into formatted_expr such that it is evaluated as part of > evaluating formatted_expr, then there is no need for the CaseTestExpr > as the propagator for raw_expr's result. > > I've expanded the commit message to mention the details. > > I'll push these tomorrow. I updated it to make the code in makeJsonConstructorExpr() that *does* need to use a CaseTestExpr a bit more readable. Also, updated the comment above CaseTestExpr to mention this instance of its usage. > On Mon, Jul 10, 2023 at 11:47 PM Alvaro Herrera <alvherre@alvh.no-ip.org> wrote: > > On 2023-Jul-10, Amit Langote wrote: > > > > I'm not in love with the fact that JSON and JSONB have pretty much > > > > parallel type categorizing functionality. It seems entirely artificial. > > > > Maybe this didn't matter when these were contained inside each .c file > > > > and nobody else had to deal with that, but I think it's not good to make > > > > this an exported concept. Is it possible to do away with that? I mean, > > > > reduce both to a single categorization enum, and a single categorization > > > > API. Here you have to cast the enum value to int in order to make > > > > ExecInitExprRec work, and that seems a bit lame; moreso when the > > > > "is_jsonb" is determined separately (cf. ExecEvalJsonConstructor) > > > > > > OK, I agree that a unified categorizing API might be better. I'll > > > look at making this better. Btw, does src/include/common/jsonapi.h > > > look like an appropriate place for that? > > > > Hmm, that header is frontend-available, and the type-category appears to > > be backend-only, so maybe no. Perhaps jsonfuncs.h is more apropos? > > execExpr.c is already dealing with array internals, so having to deal > > with json internals doesn't seem completely out of place. > > OK, attached 0003 does it like that. Essentially, I decided to only > keep JsonTypeCategory and json_categorize_type(), with some > modifications to accommodate the callers in jsonb.c. > > > > > In the 2023 standard, JSON_SCALAR is just > > > > > > > > <JSON scalar> ::= JSON_SCALAR <left paren> <value expression> <right paren> > > > > > > > > but we seem to have added a <JSON output format> clause to it. Should > > > > we really? > > > > > > Hmm, I am not seeing <JSON output format> in the rule for JSON_SCALAR, > > > > Agh, yeah, I confused myself, sorry. > > > > > Per what I wrote above, the grammar for JSON() and JSON_SCALAR() does > > > not allow specifying the FORMAT clause. Though considering what you > > > wrote, the RETURNING clause does appear to be an extension to the > > > standard's spec. > > > > Hmm, I see that <JSON output clause> (which is RETURNING plus optional > > FORMAT) appears included in JSON_OBJECT, JSON_ARRAY, JSON_QUERY, > > JSON_SERIALIZE, JSON_OBJECTAGG, JSON_ARRAYAGG. It's not necessarily a > > bad thing to have it in other places, but we should consider it > > carefully. Do we really want/need it in JSON() and JSON_SCALAR()? > > I thought that removing that support breaks JSON_TABLE() or something > but it doesn't, so maybe we can do without the extension if there's no > particular reason it's there in the first place. Maybe Andrew (cc'd) > remembers why he decided in [1] to (re-) add the RETURNING clause to > JSON() and JSON_SCALAR()? > > Updated patches, with 0003 being a new refactoring patch, are > attached. Patches 0004~ contain a few updates around JsonValueExpr. > Specifically, I removed the case for T_JsonValueExpr in > transformExprRecurse(), because I realized that JsonValueExpr > expressions never appear embedded in other expressions. That allowed > me to get rid of some needless refactoring around > transformJsonValueExpr() in the patch that adds JSON_VALUE() etc. I noticed that 0003 was giving some warnings, which is fixed in the attached updated set of patches. -- Thanks, Amit Langote EDB: http://www.enterprisedb.com

Вложения

- v5-0007-Claim-SQL-standard-compliance-for-SQL-JSON-featur.patch

- v5-0003-Unify-JSON-categorize-type-API-and-export-for-ext.patch

- v5-0004-SQL-JSON-functions.patch

- v5-0006-JSON_TABLE.patch

- v5-0005-SQL-JSON-query-functions.patch

- v5-0001-Pass-constructName-to-transformJsonValueExpr.patch

- v5-0002-Don-t-include-CaseTestExpr-in-JsonValueExpr.forma.patch

On Wed, Jul 12, 2023 at 10:23 PM Amit Langote <amitlangote09@gmail.com> wrote: > On Wed, Jul 12, 2023 at 6:41 PM Amit Langote <amitlangote09@gmail.com> wrote: > > On Mon, Jul 10, 2023 at 11:52 PM Alvaro Herrera <alvherre@alvh.no-ip.org> wrote: > > > I forgot to add: > > > > Thanks for the review of these. > > > > > * 0001 looks an obvious improvement. You could just push it now, to > > > avoid carrying it forward anymore. I would just put the constructName > > > ahead of value expr in the argument list, though. > > > > Sure, that makes sense. > > > > > * 0002: I have no idea what this is (though I probably should). I would > > > also push it right away -- if anything, so that we figure out sooner > > > that it was actually needed in the first place. Or maybe you just need > > > the right test cases? > > > > Hmm, I don't think having or not having CaseTestExpr makes a > > difference to the result of evaluating JsonValueExpr.format_expr, so > > there are no test cases to prove one way or the other. > > > > After staring at this again for a while, I think I figured out why the > > CaseTestExpr might have been put there in the first place. It seems > > to have to do with the fact that JsonValueExpr.raw_expr is currently > > evaluated independently of JsonValueExpr.formatted_expr and the > > CaseTestExpr propagates the result of the former to the evaluation of > > the latter. Actually, formatted_expr is effectively > > formatting_function(<result-of-raw_expr>), so if we put raw_expr > > itself into formatted_expr such that it is evaluated as part of > > evaluating formatted_expr, then there is no need for the CaseTestExpr > > as the propagator for raw_expr's result. > > > > I've expanded the commit message to mention the details. > > > > I'll push these tomorrow. > > I updated it to make the code in makeJsonConstructorExpr() that *does* > need to use a CaseTestExpr a bit more readable. Also, updated the > comment above CaseTestExpr to mention this instance of its usage. Pushed these two just now. > > On Mon, Jul 10, 2023 at 11:47 PM Alvaro Herrera <alvherre@alvh.no-ip.org> wrote: > > > On 2023-Jul-10, Amit Langote wrote: > > > > > I'm not in love with the fact that JSON and JSONB have pretty much > > > > > parallel type categorizing functionality. It seems entirely artificial. > > > > > Maybe this didn't matter when these were contained inside each .c file > > > > > and nobody else had to deal with that, but I think it's not good to make > > > > > this an exported concept. Is it possible to do away with that? I mean, > > > > > reduce both to a single categorization enum, and a single categorization > > > > > API. Here you have to cast the enum value to int in order to make > > > > > ExecInitExprRec work, and that seems a bit lame; moreso when the > > > > > "is_jsonb" is determined separately (cf. ExecEvalJsonConstructor) > > > > > > > > OK, I agree that a unified categorizing API might be better. I'll > > > > look at making this better. Btw, does src/include/common/jsonapi.h > > > > look like an appropriate place for that? > > > > > > Hmm, that header is frontend-available, and the type-category appears to > > > be backend-only, so maybe no. Perhaps jsonfuncs.h is more apropos? > > > execExpr.c is already dealing with array internals, so having to deal > > > with json internals doesn't seem completely out of place. > > > > OK, attached 0003 does it like that. Essentially, I decided to only > > keep JsonTypeCategory and json_categorize_type(), with some > > modifications to accommodate the callers in jsonb.c. > > > > > > > In the 2023 standard, JSON_SCALAR is just > > > > > > > > > > <JSON scalar> ::= JSON_SCALAR <left paren> <value expression> <right paren> > > > > > > > > > > but we seem to have added a <JSON output format> clause to it. Should > > > > > we really? > > > > > > > > Hmm, I am not seeing <JSON output format> in the rule for JSON_SCALAR, > > > > > > Agh, yeah, I confused myself, sorry. > > > > > > > Per what I wrote above, the grammar for JSON() and JSON_SCALAR() does > > > > not allow specifying the FORMAT clause. Though considering what you > > > > wrote, the RETURNING clause does appear to be an extension to the > > > > standard's spec. > > > > > > Hmm, I see that <JSON output clause> (which is RETURNING plus optional > > > FORMAT) appears included in JSON_OBJECT, JSON_ARRAY, JSON_QUERY, > > > JSON_SERIALIZE, JSON_OBJECTAGG, JSON_ARRAYAGG. It's not necessarily a > > > bad thing to have it in other places, but we should consider it > > > carefully. Do we really want/need it in JSON() and JSON_SCALAR()? > > > > I thought that removing that support breaks JSON_TABLE() or something > > but it doesn't, so maybe we can do without the extension if there's no > > particular reason it's there in the first place. Maybe Andrew (cc'd) > > remembers why he decided in [1] to (re-) add the RETURNING clause to > > JSON() and JSON_SCALAR()? > > > > Updated patches, with 0003 being a new refactoring patch, are > > attached. Patches 0004~ contain a few updates around JsonValueExpr. > > Specifically, I removed the case for T_JsonValueExpr in > > transformExprRecurse(), because I realized that JsonValueExpr > > expressions never appear embedded in other expressions. That allowed > > me to get rid of some needless refactoring around > > transformJsonValueExpr() in the patch that adds JSON_VALUE() etc. > > I noticed that 0003 was giving some warnings, which is fixed in the > attached updated set of patches. Here are the remaining patches, rebased. I'll remove the RETURNING clause from JSON() and JSON_SCALAR() in the next version that I will post tomorrow unless I hear objections. -- Thanks, Amit Langote EDB: http://www.enterprisedb.com

Вложения

I looked at your 0001. My 0001 are some trivial comment cleanups to that. I scrolled through all of jsonfuncs.c to see if there was a better place for the new function than the end of the file. Man, is that one ugly file. There are almost no comments! I almost wish you would create a new file so that you don't have to put this new function in such bad company. But maybe it'll improve someday, so ... whatever. In the original code, the functions here being (re)moved do not need to return a type output function in a few cases. This works okay when the functions are each contained in a single file (because each function knows that the respective datum_to_json/datum_to_jsonb user of the returned values won't need the function OID in those other cases); but as an exported function, that strange API doesn't seem great. (It only works for 0002 because the only thing that the executor does with these cached values is call datum_to_json/b). That seems easy to solve, since we can return the hardcoded output function OID in those cases anyway. A possible complaint about this is that the OID so returned would be untested code, so they might be wrong and we'd never know. However, ISTM it's better to make a promise about always returning a function OID and later fixing any bogus function OID if we ever discover that we return one, rather than having to document in the function's comment that "we only return function OIDs in such and such cases". So I made this change my 0002. A similar complain can be made about which casts we look for. Right now, only an explicit cast to JSON is useful, so that's the only thing we do. But maybe one day a cast to JSONB would become useful if there's no cast to JSON for some datatype (in the is_jsonb case only?); and maybe another type of cast would be useful. However, that seems like going too much into uncharted territory with no useful use case, so let's just not go there for now. Maybe in the future we can improve this aspect of it, if need arises. -- Álvaro Herrera Breisgau, Deutschland — https://www.EnterpriseDB.com/

Вложения

Hi Alvaro, On Fri, Jul 14, 2023 at 1:54 AM Alvaro Herrera <alvherre@alvh.no-ip.org> wrote: > I looked at your 0001. My 0001 are some trivial comment cleanups to > that. Thanks. > I scrolled through all of jsonfuncs.c to see if there was a better place > for the new function than the end of the file. Man, is that one ugly > file. There are almost no comments! I almost wish you would create a > new file so that you don't have to put this new function in such bad > company. But maybe it'll improve someday, so ... whatever. I tried to put it somewhere that is not the end of the file, though anywhere would have looked arbitrary anyway for the reasons you mention, so I didn't after all. > In the original code, the functions here being (re)moved do not need to > return a type output function in a few cases. This works okay when the > functions are each contained in a single file (because each function > knows that the respective datum_to_json/datum_to_jsonb user of the > returned values won't need the function OID in those other cases); but > as an exported function, that strange API doesn't seem great. (It only > works for 0002 because the only thing that the executor does with these > cached values is call datum_to_json/b). Agreed about not tying the new API too closely to datum_to_json[b]'s needs. > That seems easy to solve, since > we can return the hardcoded output function OID in those cases anyway. > A possible complaint about this is that the OID so returned would be > untested code, so they might be wrong and we'd never know. However, > ISTM it's better to make a promise about always returning a function OID > and later fixing any bogus function OID if we ever discover that we > return one, rather than having to document in the function's comment > that "we only return function OIDs in such and such cases". So I made > this change my 0002. +1 > A similar complaint can be made about which casts we look for. Right > now, only an explicit cast to JSON is useful, so that's the only thing > we do. But maybe one day a cast to JSONB would become useful if there's > no cast to JSON for some datatype (in the is_jsonb case only?); and > maybe another type of cast would be useful. However, that seems like > going too much into uncharted territory with no useful use case, so > let's just not go there for now. Maybe in the future we can improve > this aspect of it, if need arises. Hmm, yes, the note in the nearby comment stresses "to json (not to jsonb)", though the (historical) reason why is not so clear to me. I'm inclined to leave that as-is. I've merged your deltas in the attached 0001 and rebased the other patches. In 0002, I have now removed RETURNING support for JSON() and JSON_SCALAR(). -- Thanks, Amit Langote EDB: http://www.enterprisedb.com

Вложения

hi.

seems there is no explanation about, json_api_common_syntax in

functions-json.html

I can get json_query full synopsis from functions-json.html as follows:

json_query ( context_item, path_expression [ PASSING { value AS

varname } [, ...]] [ RETURNING data_type [ FORMAT JSON [ ENCODING UTF8

] ] ] [ { WITHOUT | WITH { CONDITIONAL | [UNCONDITIONAL] } } [ ARRAY ]

WRAPPER ] [ { KEEP | OMIT } QUOTES [ ON SCALAR STRING ] ] [ { ERROR |

NULL | EMPTY { [ ARRAY ] | OBJECT } | DEFAULT expression } ON EMPTY ]

[ { ERROR | NULL | EMPTY { [ ARRAY ] | OBJECT } | DEFAULT expression }

ON ERROR ])

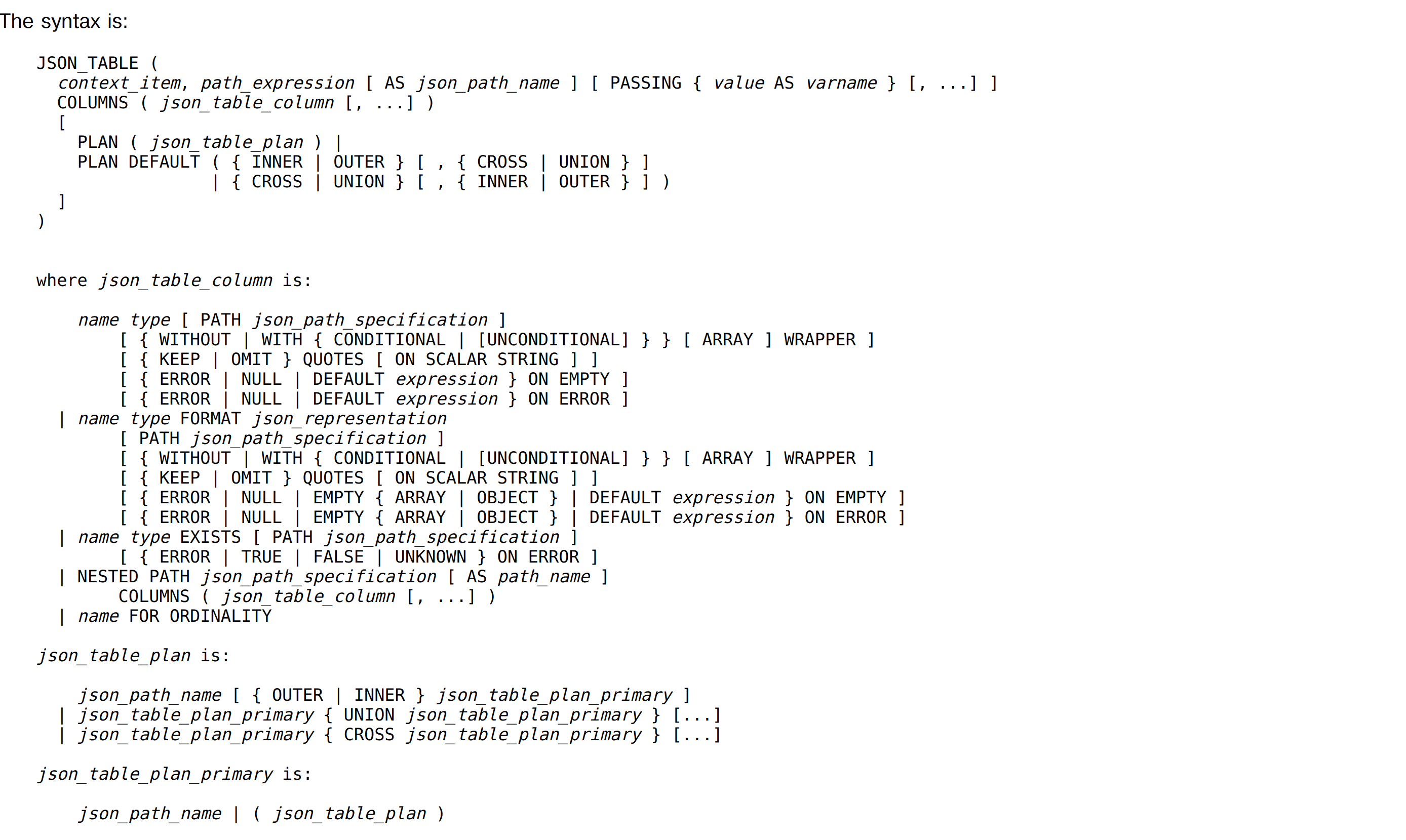

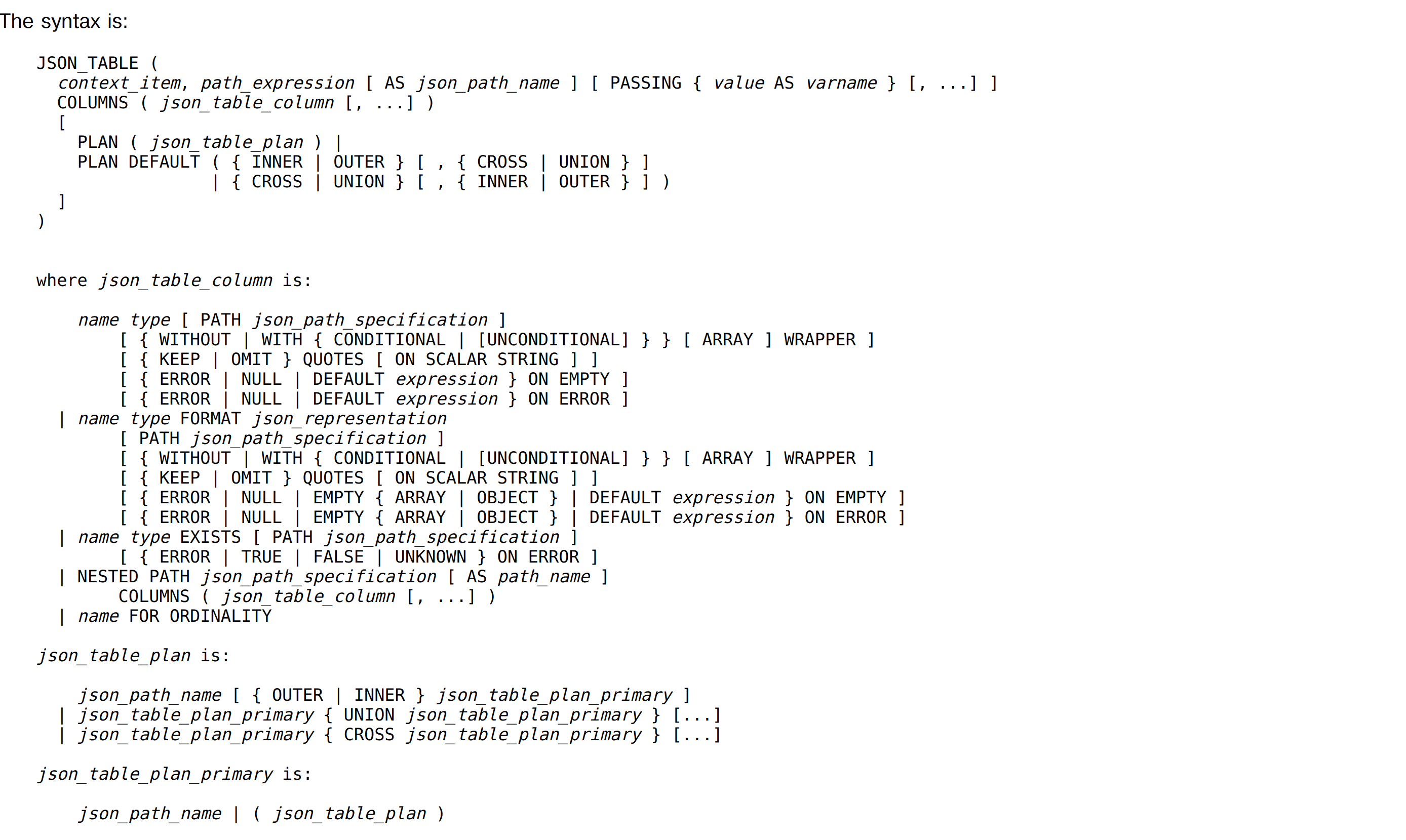

seems doesn't have a full synopsis for json_table? only partial one

by one explanation.

Op 7/17/23 om 07:00 schreef jian he:

> hi.

> seems there is no explanation about, json_api_common_syntax in

> functions-json.html

>

> I can get json_query full synopsis from functions-json.html as follows:

> json_query ( context_item, path_expression [ PASSING { value AS

> varname } [, ...]] [ RETURNING data_type [ FORMAT JSON [ ENCODING UTF8

> ] ] ] [ { WITHOUT | WITH { CONDITIONAL | [UNCONDITIONAL] } } [ ARRAY ]

> WRAPPER ] [ { KEEP | OMIT } QUOTES [ ON SCALAR STRING ] ] [ { ERROR |

> NULL | EMPTY { [ ARRAY ] | OBJECT } | DEFAULT expression } ON EMPTY ]

> [ { ERROR | NULL | EMPTY { [ ARRAY ] | OBJECT } | DEFAULT expression }

> ON ERROR ])

>

> seems doesn't have a full synopsis for json_table? only partial one

> by one explanation.

>

FWIW, Re: json_api_common_syntax

An (old) pdf that I have (ISO/IEC TR 19075-6 First edition 2017-03)

contains the below specification. It's probably the source of the

particular term. It's easy to see how it maps onto the current v7

SQL/JSON implementation. (I don't know if it has changed in later

incarnations.)

------ 8< ------------

5.2 JSON API common syntax

The SQL/JSON query functions all need a path specification, the JSON

value to be input to that path specification for querying and

processing, and optional parameter values passed to the path

specification. They use a common syntax:

<JSON API common syntax> ::=

<JSON context item> <comma> <JSON path specification>

[ AS <JSON table path name> ]

[ <JSON passing clause> ]

<JSON context item> ::=

<JSON value expression>

<JSON path specification> ::=

<character string literal>

<JSON passing clause> ::=

PASSING <JSON argument> [ { <comma> <JSON argument> } ]

<JSON argument> ::=

<JSON value expression> AS <identifier>

------ 8< ------------

And yes, we might need a readable translation of that in the docs

although it might be easier to just get get rid of the term

'json_api_common_syntax'.

HTH,

Erik Rijkers

Hi,

On Mon, Jul 17, 2023 at 4:14 PM Erik Rijkers <er@xs4all.nl> wrote:

> Op 7/17/23 om 07:00 schreef jian he:

> > hi.

> > seems there is no explanation about, json_api_common_syntax in

> > functions-json.html

> >

> > I can get json_query full synopsis from functions-json.html as follows:

> > json_query ( context_item, path_expression [ PASSING { value AS

> > varname } [, ...]] [ RETURNING data_type [ FORMAT JSON [ ENCODING UTF8

> > ] ] ] [ { WITHOUT | WITH { CONDITIONAL | [UNCONDITIONAL] } } [ ARRAY ]

> > WRAPPER ] [ { KEEP | OMIT } QUOTES [ ON SCALAR STRING ] ] [ { ERROR |

> > NULL | EMPTY { [ ARRAY ] | OBJECT } | DEFAULT expression } ON EMPTY ]

> > [ { ERROR | NULL | EMPTY { [ ARRAY ] | OBJECT } | DEFAULT expression }

> > ON ERROR ])

> >

> > seems doesn't have a full synopsis for json_table? only partial one

> > by one explanation.

I looked through the history of the docs portion of the patch and it

looks like the synopsis for JSON_TABLE(...) used to be there but was

taken out during one of the doc reworks [1].

I've added it back in the patch as I agree that it would help to have

it. Though, I am not totally sure where I've put it is the right

place for it. JSON_TABLE() is a beast that won't fit into the table

that JSON_QUERY() et al are in, so maybe that's how it will have to

be? I have no better idea.

> FWIW, Re: json_api_common_syntax

...

> An (old) pdf that I have (ISO/IEC TR 19075-6 First edition 2017-03)

> contains the below specification. It's probably the source of the

> particular term. It's easy to see how it maps onto the current v7

> SQL/JSON implementation. (I don't know if it has changed in later

> incarnations.)

>

>

> ------ 8< ------------

> 5.2 JSON API common syntax

>

> The SQL/JSON query functions all need a path specification, the JSON

> value to be input to that path specification for querying and

> processing, and optional parameter values passed to the path

> specification. They use a common syntax:

>

> <JSON API common syntax> ::=

> <JSON context item> <comma> <JSON path specification>

> [ AS <JSON table path name> ]

> [ <JSON passing clause> ]

>

> <JSON context item> ::=

> <JSON value expression>

>

> <JSON path specification> ::=

> <character string literal>

>

> <JSON passing clause> ::=

> PASSING <JSON argument> [ { <comma> <JSON argument> } ]

>

> <JSON argument> ::=

> <JSON value expression> AS <identifier>

>

> ------ 8< ------------

>

> And yes, we might need a readable translation of that in the docs

> although it might be easier to just get get rid of the term

> 'json_api_common_syntax'.

I found a patch proposed by Andrew Dunstan in the v15 dev cycle to get

rid of the term in the JSON_TABLE docs that Erik seemed to agree with

[2], so I've applied it.

Attached updated patches. In 0002, I removed the mention of the

RETURNING clause in the JSON(), JSON_SCALAR() documentation, which I

had forgotten to do in the last version which removed its support in

code.

I think 0001 looks ready to go. Alvaro?

Also, I've been wondering if it isn't too late to apply the following

to v16 too, so as to make the code look similar in both branches:

b6e1157e7d Don't include CaseTestExpr in JsonValueExpr.formatted_expr

785480c953 Pass constructName to transformJsonValueExpr()

--

Thanks, Amit Langote

EDB: http://www.enterprisedb.com

[1] https://www.postgresql.org/message-id/044204fa-738d-d89a-0e81-1c04696ba676%40dunslane.net

[2] https://www.postgresql.org/message-id/10c997db-9270-bdd5-04d5-0ffc1eefcdb7%40dunslane.net

Вложения

On 2023-Jul-18, Amit Langote wrote: > Attached updated patches. In 0002, I removed the mention of the > RETURNING clause in the JSON(), JSON_SCALAR() documentation, which I > had forgotten to do in the last version which removed its support in > code. > I think 0001 looks ready to go. Alvaro? It looks reasonable to me. > Also, I've been wondering if it isn't too late to apply the following > to v16 too, so as to make the code look similar in both branches: Hmm. > 785480c953 Pass constructName to transformJsonValueExpr() I think 785480c953 can easily be considered a bugfix on 7081ac46ace8, so I agree it's better to apply it to 16. > b6e1157e7d Don't include CaseTestExpr in JsonValueExpr.formatted_expr I feel a bit uneasy about this one. It seems to assume that formatted_expr is always set, but at the same time it's not obvious that it is. (Maybe this aspect just needs some more commentary). I agree that it would be better to make both branches identical, because if there's a problem, we are better equipped to get a fix done to both. As for the removal of makeCaseTestExpr(), I agree -- of the six callers of makeNode(CastTestExpr), only two of them would be able to use the new function, so it doesn't look of general enough usefulness. -- Álvaro Herrera Breisgau, Deutschland — https://www.EnterpriseDB.com/ Y una voz del caos me habló y me dijo "Sonríe y sé feliz, podría ser peor". Y sonreí. Y fui feliz. Y fue peor.

On Wed, Jul 19, 2023 at 12:53 AM Alvaro Herrera <alvherre@alvh.no-ip.org> wrote: > On 2023-Jul-18, Amit Langote wrote: > > > Attached updated patches. In 0002, I removed the mention of the > > RETURNING clause in the JSON(), JSON_SCALAR() documentation, which I > > had forgotten to do in the last version which removed its support in > > code. > > > I think 0001 looks ready to go. Alvaro? > > It looks reasonable to me. Thanks for taking another look. I will push this tomorrow. > > Also, I've been wondering if it isn't too late to apply the following > > to v16 too, so as to make the code look similar in both branches: > > Hmm. > > > 785480c953 Pass constructName to transformJsonValueExpr() > > I think 785480c953 can easily be considered a bugfix on 7081ac46ace8, so > I agree it's better to apply it to 16. OK. > > b6e1157e7d Don't include CaseTestExpr in JsonValueExpr.formatted_expr > > I feel a bit uneasy about this one. It seems to assume that > formatted_expr is always set, but at the same time it's not obvious that > it is. (Maybe this aspect just needs some more commentary). Hmm, I agree that the comments about formatted_expr could be improved further, for which I propose the attached. Actually, staring some more at this, I'm inclined to change makeJsonValueExpr() to allow callers to pass it the finished 'formatted_expr' rather than set it by themselves. > I agree > that it would be better to make both branches identical, because if > there's a problem, we are better equipped to get a fix done to both. > > As for the removal of makeCaseTestExpr(), I agree -- of the six callers > of makeNode(CastTestExpr), only two of them would be able to use the new > function, so it doesn't look of general enough usefulness. OK, so you agree with back-patching this one too, though perhaps only after applying something like the aforementioned patch. Just to be sure, would the good practice in this case be to squash the fixup patch into b6e1157e7d before back-patching? -- Thanks, Amit Langote EDB: http://www.enterprisedb.com

Вложения

On Wed, Jul 19, 2023 at 5:17 PM Amit Langote <amitlangote09@gmail.com> wrote: > On Wed, Jul 19, 2023 at 12:53 AM Alvaro Herrera <alvherre@alvh.no-ip.org> wrote: > > On 2023-Jul-18, Amit Langote wrote: > > > b6e1157e7d Don't include CaseTestExpr in JsonValueExpr.formatted_expr > > > > I feel a bit uneasy about this one. It seems to assume that > > formatted_expr is always set, but at the same time it's not obvious that > > it is. (Maybe this aspect just needs some more commentary). > > Hmm, I agree that the comments about formatted_expr could be improved > further, for which I propose the attached. Actually, staring some > more at this, I'm inclined to change makeJsonValueExpr() to allow > callers to pass it the finished 'formatted_expr' rather than set it by > themselves. Hmm, after looking some more, it may not be entirely right that formatted_expr is always set in the code paths that call transformJsonValueExpr(). Will look at this some more tomorrow. -- Thanks, Amit Langote EDB: http://www.enterprisedb.com

On Tue, Jul 18, 2023 at 5:11 PM Amit Langote <amitlangote09@gmail.com> wrote:

>

> Hi,

>

> On Mon, Jul 17, 2023 at 4:14 PM Erik Rijkers <er@xs4all.nl> wrote:

> > Op 7/17/23 om 07:00 schreef jian he:

> > > hi.

> > > seems there is no explanation about, json_api_common_syntax in

> > > functions-json.html

> > >

> > > I can get json_query full synopsis from functions-json.html as follows:

> > > json_query ( context_item, path_expression [ PASSING { value AS

> > > varname } [, ...]] [ RETURNING data_type [ FORMAT JSON [ ENCODING UTF8

> > > ] ] ] [ { WITHOUT | WITH { CONDITIONAL | [UNCONDITIONAL] } } [ ARRAY ]

> > > WRAPPER ] [ { KEEP | OMIT } QUOTES [ ON SCALAR STRING ] ] [ { ERROR |

> > > NULL | EMPTY { [ ARRAY ] | OBJECT } | DEFAULT expression } ON EMPTY ]

> > > [ { ERROR | NULL | EMPTY { [ ARRAY ] | OBJECT } | DEFAULT expression }

> > > ON ERROR ])

> > >

> > > seems doesn't have a full synopsis for json_table? only partial one

> > > by one explanation.

>

> I looked through the history of the docs portion of the patch and it

> looks like the synopsis for JSON_TABLE(...) used to be there but was

> taken out during one of the doc reworks [1].

>

> I've added it back in the patch as I agree that it would help to have

> it. Though, I am not totally sure where I've put it is the right

> place for it. JSON_TABLE() is a beast that won't fit into the table

> that JSON_QUERY() et al are in, so maybe that's how it will have to

> be? I have no better idea.

>

> >

attached screenshot render json_table syntax almost plain html. It looks fine.

based on syntax, then I am kind of confused with following 2 cases:

--1

SELECT * FROM JSON_TABLE(jsonb '1', '$'

COLUMNS (a int PATH 'strict $.a' default 1 ON EMPTY default 2 on error)

ERROR ON ERROR) jt;

COLUMNS (a int PATH 'strict $.a' default 1 ON EMPTY default 2 on error)

ERROR ON ERROR) jt;

--2

SELECT * FROM JSON_TABLE(jsonb '1', '$'

COLUMNS (a int PATH 'strict $.a' default 1 ON EMPTY default 2 on error)) jt;

the first one should yield syntax error?

SELECT * FROM JSON_TABLE(jsonb '1', '$'

COLUMNS (a int PATH 'strict $.a' default 1 ON EMPTY default 2 on error)) jt;

the first one should yield syntax error?

Вложения

Hello,

On Thu, Jul 20, 2023 at 10:35 AM jian he <jian.universality@gmail.com> wrote:

> On Tue, Jul 18, 2023 at 5:11 PM Amit Langote <amitlangote09@gmail.com> wrote:

> > > Op 7/17/23 om 07:00 schreef jian he:

> > > > hi.

> > > > seems there is no explanation about, json_api_common_syntax in

> > > > functions-json.html

> > > >

> > > > I can get json_query full synopsis from functions-json.html as follows:

> > > > json_query ( context_item, path_expression [ PASSING { value AS

> > > > varname } [, ...]] [ RETURNING data_type [ FORMAT JSON [ ENCODING UTF8

> > > > ] ] ] [ { WITHOUT | WITH { CONDITIONAL | [UNCONDITIONAL] } } [ ARRAY ]

> > > > WRAPPER ] [ { KEEP | OMIT } QUOTES [ ON SCALAR STRING ] ] [ { ERROR |

> > > > NULL | EMPTY { [ ARRAY ] | OBJECT } | DEFAULT expression } ON EMPTY ]

> > > > [ { ERROR | NULL | EMPTY { [ ARRAY ] | OBJECT } | DEFAULT expression }

> > > > ON ERROR ])

> > > >

> > > > seems doesn't have a full synopsis for json_table? only partial one

> > > > by one explanation.

> >

> > I looked through the history of the docs portion of the patch and it

> > looks like the synopsis for JSON_TABLE(...) used to be there but was

> > taken out during one of the doc reworks [1].

> >

> > I've added it back in the patch as I agree that it would help to have

> > it. Though, I am not totally sure where I've put it is the right

> > place for it. JSON_TABLE() is a beast that won't fit into the table

> > that JSON_QUERY() et al are in, so maybe that's how it will have to

> > be? I have no better idea.

>

> attached screenshot render json_table syntax almost plain html. It looks fine.

Thanks for checking.

> based on syntax, then I am kind of confused with following 2 cases:

> --1

> SELECT * FROM JSON_TABLE(jsonb '1', '$'

> COLUMNS (a int PATH 'strict $.a' default 1 ON EMPTY default 2 on error)

> ERROR ON ERROR) jt;

>

> --2

> SELECT * FROM JSON_TABLE(jsonb '1', '$'

> COLUMNS (a int PATH 'strict $.a' default 1 ON EMPTY default 2 on error)) jt;

>

> the first one should yield syntax error?

No. Actually, the synopsis missed the optional ON ERROR clause that

can appear after COLUMNS(...). Will fix it.

--

Thanks, Amit Langote

EDB: http://www.enterprisedb.com

On Wed, Jul 19, 2023 at 5:17 PM Amit Langote <amitlangote09@gmail.com> wrote: > On Wed, Jul 19, 2023 at 12:53 AM Alvaro Herrera <alvherre@alvh.no-ip.org> wrote: > > On 2023-Jul-18, Amit Langote wrote: > > > > > Attached updated patches. In 0002, I removed the mention of the > > > RETURNING clause in the JSON(), JSON_SCALAR() documentation, which I > > > had forgotten to do in the last version which removed its support in > > > code. > > > > > I think 0001 looks ready to go. Alvaro? > > > > It looks reasonable to me. > > Thanks for taking another look. > > I will push this tomorrow. Pushed. > > > Also, I've been wondering if it isn't too late to apply the following > > > to v16 too, so as to make the code look similar in both branches: > > > > Hmm. > > > > > 785480c953 Pass constructName to transformJsonValueExpr() > > > > I think 785480c953 can easily be considered a bugfix on 7081ac46ace8, so > > I agree it's better to apply it to 16. > > OK. Pushed to 16. > > > b6e1157e7d Don't include CaseTestExpr in JsonValueExpr.formatted_expr > > > > I feel a bit uneasy about this one. It seems to assume that > > formatted_expr is always set, but at the same time it's not obvious that > > it is. (Maybe this aspect just needs some more commentary). > > Hmm, I agree that the comments about formatted_expr could be improved > further, for which I propose the attached. Actually, staring some > more at this, I'm inclined to change makeJsonValueExpr() to allow > callers to pass it the finished 'formatted_expr' rather than set it by > themselves. > > > I agree > > that it would be better to make both branches identical, because if > > there's a problem, we are better equipped to get a fix done to both. > > > > As for the removal of makeCaseTestExpr(), I agree -- of the six callers > > of makeNode(CastTestExpr), only two of them would be able to use the new > > function, so it doesn't look of general enough usefulness. > > OK, so you agree with back-patching this one too, though perhaps only > after applying something like the aforementioned patch. I looked at this some more and concluded that it's fine to think that all JsonValueExpr nodes leaving the parser have their formatted_expr set. I've updated the commentary some more in the patch attached as 0001. Rebased SQL/JSON patches also attached. I've fixed the JSON_TABLE syntax synopsis in the documentation as mentioned in my other email. -- Thanks, Amit Langote EDB: http://www.enterprisedb.com

Вложения

On Thu, Jul 20, 2023 at 17:19 Amit Langote <amitlangote09@gmail.com> wrote:

On Wed, Jul 19, 2023 at 5:17 PM Amit Langote <amitlangote09@gmail.com> wrote:

> On Wed, Jul 19, 2023 at 12:53 AM Alvaro Herrera <alvherre@alvh.no-ip.org> wrote:

> > On 2023-Jul-18, Amit Langote wrote:

> >

> > > Attached updated patches. In 0002, I removed the mention of the

> > > RETURNING clause in the JSON(), JSON_SCALAR() documentation, which I

> > > had forgotten to do in the last version which removed its support in

> > > code.

> >

> > > I think 0001 looks ready to go. Alvaro?

> >

> > It looks reasonable to me.

>

> Thanks for taking another look.

>

> I will push this tomorrow.

Pushed.

> > > Also, I've been wondering if it isn't too late to apply the following

> > > to v16 too, so as to make the code look similar in both branches:

> >

> > Hmm.

> >

> > > 785480c953 Pass constructName to transformJsonValueExpr()

> >

> > I think 785480c953 can easily be considered a bugfix on 7081ac46ace8, so

> > I agree it's better to apply it to 16.

>

> OK.

Pushed to 16.

> > > b6e1157e7d Don't include CaseTestExpr in JsonValueExpr.formatted_expr

> >

> > I feel a bit uneasy about this one. It seems to assume that

> > formatted_expr is always set, but at the same time it's not obvious that

> > it is. (Maybe this aspect just needs some more commentary).

>

> Hmm, I agree that the comments about formatted_expr could be improved

> further, for which I propose the attached. Actually, staring some

> more at this, I'm inclined to change makeJsonValueExpr() to allow

> callers to pass it the finished 'formatted_expr' rather than set it by

> themselves.

>

> > I agree

> > that it would be better to make both branches identical, because if

> > there's a problem, we are better equipped to get a fix done to both.

> >

> > As for the removal of makeCaseTestExpr(), I agree -- of the six callers

> > of makeNode(CastTestExpr), only two of them would be able to use the new

> > function, so it doesn't look of general enough usefulness.

>

> OK, so you agree with back-patching this one too, though perhaps only

> after applying something like the aforementioned patch.

I looked at this some more and concluded that it's fine to think that

all JsonValueExpr nodes leaving the parser have their formatted_expr

set. I've updated the commentary some more in the patch attached as

0001.

Rebased SQL/JSON patches also attached. I've fixed the JSON_TABLE

syntax synopsis in the documentation as mentioned in my other email.

I’m thinking of pushing 0001 and 0002 tomorrow barring objections.

Thanks, Amit Langote

EDB: http://www.enterprisedb.com

EDB: http://www.enterprisedb.com

On 2023-Jul-21, Amit Langote wrote: > I’m thinking of pushing 0001 and 0002 tomorrow barring objections. 0001 looks reasonable to me. I think you asked whether to squash that one with the other bugfix commit for the same code that you already pushed to master; I think there's no point in committing as separate patches, because the first one won't show up in the git_changelog output as a single entity with the one in 16, so it'll just be additional noise. I've looked at 0002 at various points in time and I think it looks generally reasonable. I think your removal of a couple of newlines (where originally two appear in sequence) is unwarranted; that the name to_json[b]_worker is ugly for exported functions (maybe "datum_to_json" would be better, or you may have better ideas); and that the omission of the stock comment in the new stanzas in FigureColnameInternal() is strange. But I don't have anything serious. Do add some ecpg tests ... Also, remember to pgindent and bump catversion, if you haven't already. -- Álvaro Herrera 48°01'N 7°57'E — https://www.EnterpriseDB.com/ "No hay hombre que no aspire a la plenitud, es decir, la suma de experiencias de que un hombre es capaz"

Hi Alvaro, Thanks for taking a look. On Fri, Jul 21, 2023 at 1:02 AM Alvaro Herrera <alvherre@alvh.no-ip.org> wrote: > On 2023-Jul-21, Amit Langote wrote: > > > I’m thinking of pushing 0001 and 0002 tomorrow barring objections. > > 0001 looks reasonable to me. I think you asked whether to squash that > one with the other bugfix commit for the same code that you already > pushed to master; I think there's no point in committing as separate > patches, because the first one won't show up in the git_changelog output > as a single entity with the one in 16, so it'll just be additional > noise. OK, pushed 0001 to HEAD and b6e1157e7d + 0001 to 16. > I've looked at 0002 at various points in time and I think it looks > generally reasonable. I think your removal of a couple of newlines > (where originally two appear in sequence) is unwarranted; that the name > to_json[b]_worker is ugly for exported functions (maybe "datum_to_json" > would be better, or you may have better ideas); Went with datum_to_json[b]. Created a separate refactoring patch for this, attached as 0001. Created another refactoring patch for the hunks related to renaming of a nonterminal in gram.y, attached as 0002. > and that the omission of > the stock comment in the new stanzas in FigureColnameInternal() is > strange. Yes, fixed. > But I don't have anything serious. Do add some ecpg tests ... Added. > Also, remember to pgindent and bump catversion, if you haven't already. Will do. Wasn't sure myself whether the catversion should be bumped, but I suppose it must be because ruleutils.c has changed. Attaching latest patches. Will push 0001, 0002, and 0003 on Monday to avoid worrying about the buildfarm on a Friday evening. -- Thanks, Amit Langote EDB: http://www.enterprisedb.com

Вложения

- v10-0002-Rename-a-nonterminal-used-in-SQL-JSON-grammar.patch

- v10-0006-Claim-SQL-standard-compliance-for-SQL-JSON-featu.patch

- v10-0003-Add-more-SQL-JSON-constructor-functions.patch

- v10-0005-JSON_TABLE.patch

- v10-0004-SQL-JSON-query-functions.patch

- v10-0001-Some-refactoring-to-export-json-b-conversion-fun.patch

hi

based on v10*.patch. questions/ideas about the doc.

> json_exists ( context_item, path_expression [ PASSING { value AS varname } [, ...]] [ RETURNING data_type ] [ { TRUE

|FALSE | UNKNOWN | ERROR } ON ERROR ])

> Returns true if the SQL/JSON path_expression applied to the context_item using the values yields any items. The ON

ERRORclause specifies what is returned if an error occurs. Note that if the path_expression is strict, an error is

generatedif it yields no items. The default value is UNKNOWN which causes a NULL result.

only SELECT JSON_EXISTS(NULL::jsonb, '$'); will cause a null result.

In lex mode, if yield no items return false, no error will return,

even error on error.

Only case error will happen, strict mode error on error. (select

json_exists(jsonb '{"a": [1,2,3]}', 'strict $.b' error on error)

so I came up with the following:

Returns true if the SQL/JSON path_expression applied to the

context_item using the values yields any items. The ON ERROR clause

specifies what is returned if an error occurs, if not specified, the

default value is false when it yields no items.

Note that if the path_expression is strict, ERROR ON ERROR specified,

an error is generated if it yields no items.

--------------------------------------------------------------------------------------------------

/* --first branch of json_table_column spec.

name type [ PATH json_path_specification ]

[ { WITHOUT | WITH { CONDITIONAL | [UNCONDITIONAL] } } [ ARRAY

] WRAPPER ]

[ { KEEP | OMIT } QUOTES [ ON SCALAR STRING ] ]

[ { ERROR | NULL | DEFAULT expression } ON EMPTY ]

[ { ERROR | NULL | DEFAULT expression } ON ERROR ]

*/

I am not sure what " [ ON SCALAR STRING ]" means. There is no test on this.

i wonder how to achieve the following query with json_table:

select json_query(jsonb '"world"', '$' returning text keep quotes) ;

the following case will fail.

SELECT * FROM JSON_TABLE(jsonb '"world"', '$' COLUMNS (item text PATH

'$' keep quotes ON SCALAR STRING ));

ERROR: cannot use OMIT QUOTES clause with scalar columns

LINE 1: ...T * FROM JSON_TABLE(jsonb '"world"', '$' COLUMNS (item text ...

^

error should be ERROR: cannot use KEEP QUOTES clause with scalar columns?

LINE1 should be: SELECT * FROM JSON_TABLE(jsonb '"world"', '$' COLUMNS

(item text ...

--------------------------------------------------------------------------------

quote from json_query:

> This function must return a JSON string, so if the path expression returns multiple SQL/JSON items, you must wrap the

resultusing the

> WITH WRAPPER clause.

I think the final result will be: if the RETURNING clause is not

specified, then the returned data type is jsonb. if multiple SQL/JSON

items returned, if not specified WITH WRAPPER, null will be returned.

------------------------------------------------------------------------------------

quote from json_query:

> The ON ERROR and ON EMPTY clauses have similar semantics to those clauses for json_value.

quote from json_table:

> These clauses have the same syntax and semantics as for json_value and json_query.

it would be better in json_value syntax explicit mention: if not

explicitly mentioned, what will happen when on error, on empty

happened ?

-------------------------------------------------------------------------------------

> You can have only one ordinality column per table

but the regress test shows that you can have more than one ordinality column.

----------------------------------------------------------------------------

similar to here

https://git.postgresql.org/cgit/postgresql.git/tree/src/test/regress/expected/sqljson.out#n804

Maybe in file src/test/regress/sql/jsonb_sqljson.sql line 349, you can

also create a table first. insert corner case data.

then split the very wide select query (more than 26 columns) into 4

small queries, better to view the expected result on the web.

On Fri, Jul 21, 2023 at 7:33 PM Amit Langote <amitlangote09@gmail.com> wrote: > On Fri, Jul 21, 2023 at 1:02 AM Alvaro Herrera <alvherre@alvh.no-ip.org> wrote: > > On 2023-Jul-21, Amit Langote wrote: > > > > > I’m thinking of pushing 0001 and 0002 tomorrow barring objections. > > > > 0001 looks reasonable to me. I think you asked whether to squash that > > one with the other bugfix commit for the same code that you already > > pushed to master; I think there's no point in committing as separate > > patches, because the first one won't show up in the git_changelog output > > as a single entity with the one in 16, so it'll just be additional > > noise. > > OK, pushed 0001 to HEAD and b6e1157e7d + 0001 to 16. > > > I've looked at 0002 at various points in time and I think it looks > > generally reasonable. I think your removal of a couple of newlines > > (where originally two appear in sequence) is unwarranted; that the name > > to_json[b]_worker is ugly for exported functions (maybe "datum_to_json" > > would be better, or you may have better ideas); > > Went with datum_to_json[b]. Created a separate refactoring patch for > this, attached as 0001. > > Created another refactoring patch for the hunks related to renaming of > a nonterminal in gram.y, attached as 0002. > > > and that the omission of > > the stock comment in the new stanzas in FigureColnameInternal() is > > strange. > > Yes, fixed. > > > But I don't have anything serious. Do add some ecpg tests ... > > Added. > > > Also, remember to pgindent and bump catversion, if you haven't already. > > Will do. Wasn't sure myself whether the catversion should be bumped, > but I suppose it must be because ruleutils.c has changed. > > Attaching latest patches. Will push 0001, 0002, and 0003 on Monday to > avoid worrying about the buildfarm on a Friday evening. And pushed. Will post the remaining patches after addressing jian he's comments. -- Thanks, Amit Langote EDB: http://www.enterprisedb.com

Hi, Thank you for developing such a great feature. The attached patch formats the documentation like any other function definition: - Added right parenthesis to json function calls. - Added <returnvalue> to json functions. - Added a space to the 'expression' part of the json_scalar function. - Added a space to the 'expression' part of the json_serialize function. It seems that the three functions added this time do not have tuples in the pg_proc catalog. Is it unnecessary? Regards, Noriyoshi Shinoda -----Original Message----- From: Amit Langote <amitlangote09@gmail.com> Sent: Wednesday, July 26, 2023 5:10 PM To: Alvaro Herrera <alvherre@alvh.no-ip.org> Cc: Andrew Dunstan <andrew@dunslane.net>; Erik Rijkers <er@xs4all.nl>; PostgreSQL-development <pgsql-hackers@postgresql.org>;jian he <jian.universality@gmail.com> Subject: Re: remaining sql/json patches On Fri, Jul 21, 2023 at 7:33 PM Amit Langote <amitlangote09@gmail.com> wrote: > On Fri, Jul 21, 2023 at 1:02 AM Alvaro Herrera <alvherre@alvh.no-ip.org> wrote: > > On 2023-Jul-21, Amit Langote wrote: > > > > > I’m thinking of pushing 0001 and 0002 tomorrow barring objections. > > > > 0001 looks reasonable to me. I think you asked whether to squash > > that one with the other bugfix commit for the same code that you > > already pushed to master; I think there's no point in committing as > > separate patches, because the first one won't show up in the > > git_changelog output as a single entity with the one in 16, so it'll > > just be additional noise. > > OK, pushed 0001 to HEAD and b6e1157e7d + 0001 to 16. > > > I've looked at 0002 at various points in time and I think it looks > > generally reasonable. I think your removal of a couple of newlines > > (where originally two appear in sequence) is unwarranted; that the > > name to_json[b]_worker is ugly for exported functions (maybe "datum_to_json" > > would be better, or you may have better ideas); > > Went with datum_to_json[b]. Created a separate refactoring patch for > this, attached as 0001. > > Created another refactoring patch for the hunks related to renaming of > a nonterminal in gram.y, attached as 0002. > > > and that the omission of > > the stock comment in the new stanzas in FigureColnameInternal() is > > strange. > > Yes, fixed. > > > But I don't have anything serious. Do add some ecpg tests ... > > Added. > > > Also, remember to pgindent and bump catversion, if you haven't already. > > Will do. Wasn't sure myself whether the catversion should be bumped, > but I suppose it must be because ruleutils.c has changed. > > Attaching latest patches. Will push 0001, 0002, and 0003 on Monday to > avoid worrying about the buildfarm on a Friday evening. And pushed. Will post the remaining patches after addressing jian he's comments. -- Thanks, Amit Langote EDB: http://www.enterprisedb.com

Вложения

Hello, On Thu, Jul 27, 2023 at 6:36 PM Shinoda, Noriyoshi (HPE Services Japan - FSIP) <noriyoshi.shinoda@hpe.com> wrote: > Hi, > Thank you for developing such a great feature. The attached patch formats the documentation like any other function definition: > - Added right parenthesis to json function calls. > - Added <returnvalue> to json functions. > - Added a space to the 'expression' part of the json_scalar function. > - Added a space to the 'expression' part of the json_serialize function. Thanks for checking and the patch. Will push shortly. > It seems that the three functions added this time do not have tuples in the pg_proc catalog. Is it unnecessary? Yes. These are not functions that get pg_proc entries, but SQL constructs that *look like* functions, similar to XMLEXISTS(), etc. -- Thanks, Amit Langote EDB: http://www.enterprisedb.com

Op 7/21/23 om 12:33 schreef Amit Langote: > > Thanks for taking a look. > Hi Amit, Is there any chance to rebase the outstanding SQL/JSON patches, (esp. json_query)? Thanks! Erik Rijkers

Hi,

On Fri, Aug 4, 2023 at 19:01 Erik Rijkers <er@xs4all.nl> wrote:

Op 7/21/23 om 12:33 schreef Amit Langote:

>

> Thanks for taking a look.

>

Hi Amit,

Is there any chance to rebase the outstanding SQL/JSON patches, (esp.

json_query)?

Yes, working on it. Will post a WIP shortly.

Thanks, Amit Langote

EDB: http://www.enterprisedb.com

EDB: http://www.enterprisedb.com

Hi,

On Sun, Jul 23, 2023 at 5:17 PM jian he <jian.universality@gmail.com> wrote:

> hi

> based on v10*.patch. questions/ideas about the doc.

Thanks for taking a look.